Google has taken a significant leap forward in artificial intelligence by introducing real-time screen and camera feed interpretation for its Gemini AI assistant. Available now to select Google One AI Premium subscribers, these features mark a transformative moment in how users interact with AI, enabling Gemini to “see” and analyze visual data in real time. This development underscores Google’s continued dominance in the AI assistant space, leaving competitors like Amazon’s Alexa and Apple’s Siri playing catch-up.

The new features, powered by Google’s “Project Astra” initiative, allow Gemini to process and respond to visual information from a user’s smartphone screen or camera feed. This means users can now point their phone at an object, scene, or even their device’s screen, and Gemini will provide instant, context-aware answers or suggestions. For instance, a user could hold their phone up to a piece of pottery and ask Gemini for advice on choosing a paint color, or they could share their screen and ask the AI to explain a complex graph or troubleshoot an error message.

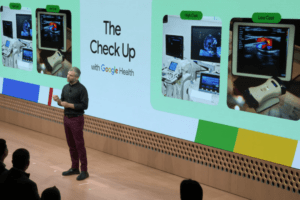

The rollout of these capabilities comes nearly a year after Google first teased Project Astra during its I/O 2024 developer conference. At the time, the company demonstrated how Astra could enable AI assistants to understand and interact with the world in real time, blending visual and conversational AI in ways that felt almost futuristic. Now, that vision is becoming a reality for Gemini Advanced subscribers on the Google One AI Premium plan.

A Reddit user recently shared a video showcasing Gemini’s new screen-reading ability, which appeared on their Xiaomi phone. The video demonstrates how the AI can analyze and interpret on-screen content, providing helpful insights or answering questions based on what it “sees.” This functionality is particularly promising for tasks like troubleshooting technical issues, learning new software, or even translating foreign text directly from a screen.

Gemini’s live video interpretation feature is just as notable as its screen reading. By accessing the smartphone camera feed, the AI can analyze real-world scenes and offer contextually relevant responses. In a demonstration video published by Google earlier this month, a user asks Gemini for advice on selecting a paint color for a piece of pottery. The AI identifies the object and then provides tailored suggestions, showing that it can understand and respond to the physical world in meaningful ways.

Google spokesperson Alex Joseph confirmed the rollout in an email to The Verge, emphasizing that these features are part of the company’s ongoing efforts to enhance Gemini’s capabilities and deliver more intuitive, personalized AI experiences. The timing is particularly noteworthy, as the rollout comes amid intensifying competition in the AI assistant market.

Amazon, for instance, is preparing to launch its Alexa Plus upgrade, which promises similar real-time interaction capabilities. However, the service is still in limited early access, and its full potential remains to be seen. Apple, on the other hand, has reportedly delayed the release of its upgraded Siri, leaving Google with a clear lead in the race to redefine AI assistance. Even Samsung, which continues to support its Bixby assistant, has made Gemini the default AI on its latest smartphones, further solidifying Google’s position as the industry frontrunner.

The implications of Gemini’s new features extend far beyond convenience. By enabling real-time visual interpretation, Google is pushing the boundaries of what AI assistants can do, opening up a world of possibilities for both personal and professional use. For students, this could mean having an AI tutor that can explain complex diagrams or equations in real time. For professionals, it could translate into a virtual assistant capable of analyzing spreadsheets, debugging code, or even providing design feedback.

However, the rollout also raises important questions about privacy and data security. Allowing an AI to access and analyze screen content or camera feeds in real time requires a high degree of trust in how that data is handled. Google has assured users that all interactions with Gemini are encrypted and processed locally whenever possible, minimizing the risk of sensitive information being exposed. Still, as these features become more widespread, it will be crucial for the company to maintain transparency and ensure robust safeguards are in place.

For now, the response from early adopters has been overwhelmingly positive. Many users have praised the seamless integration of Gemini’s new capabilities, noting how intuitive and responsive the AI feels in real-world scenarios. As more people gain access to these features, it’s likely that demand for the Google One AI Premium plan will grow, further cementing Gemini’s position as the most advanced AI assistant on the market.

Looking ahead, Google’s continued investment in AI innovation suggests that this is just the beginning. With Project Astra serving as the foundation for Gemini’s evolving capabilities, the company is well-positioned to introduce even more groundbreaking features in the coming months. Whether it’s enhancing real-time collaboration, enabling more sophisticated visual analysis, or integrating with other Google services, the future of AI assistance looks brighter than ever.

Gemini’s ability to “see” and interpret the world in real time represents a major milestone in the evolution of AI. As users begin to explore these new features, one thing is clear: the line between human and machine intelligence is becoming increasingly blurred, and Google is leading the charge.
