Imagine gazing into a crystal ball, trying to decipher the intricate workings of a fortune teller’s predictions. That’s often how we feel when confronted with AI models – powerful yet opaque, churning out predictions with seemingly little rhyme or reason. But fear not, for a ray of clarity shines through the fog of complexity: AI model interpretability.

Why Explainability Matters:

Just as we wouldn’t trust a medical diagnosis without understanding the reasoning behind it, we shouldn’t accept AI predictions blindly. Explainable AI aims to lift the veil of mystery, shedding light on how models arrive at their decisions. This transparency is crucial for:

- Building Trust: When we understand how AI models work, we’re more likely to trust their outputs, fostering better human-AI collaboration.

- Debugging and Error Detection: By pinpointing the factors influencing a model’s decisions, we can identify and rectify biases, errors, and unfair outcomes.

- Compliance and Fairness: Explainability becomes crucial in regulated sectors like healthcare and finance, ensuring compliance with ethical guidelines and preventing AI-driven discrimination.

The Toolbox of Interpretability:

Fortunately, we’re not left grasping at shadows. A toolkit of interpretability techniques empowers us to peel back the layers of AI:

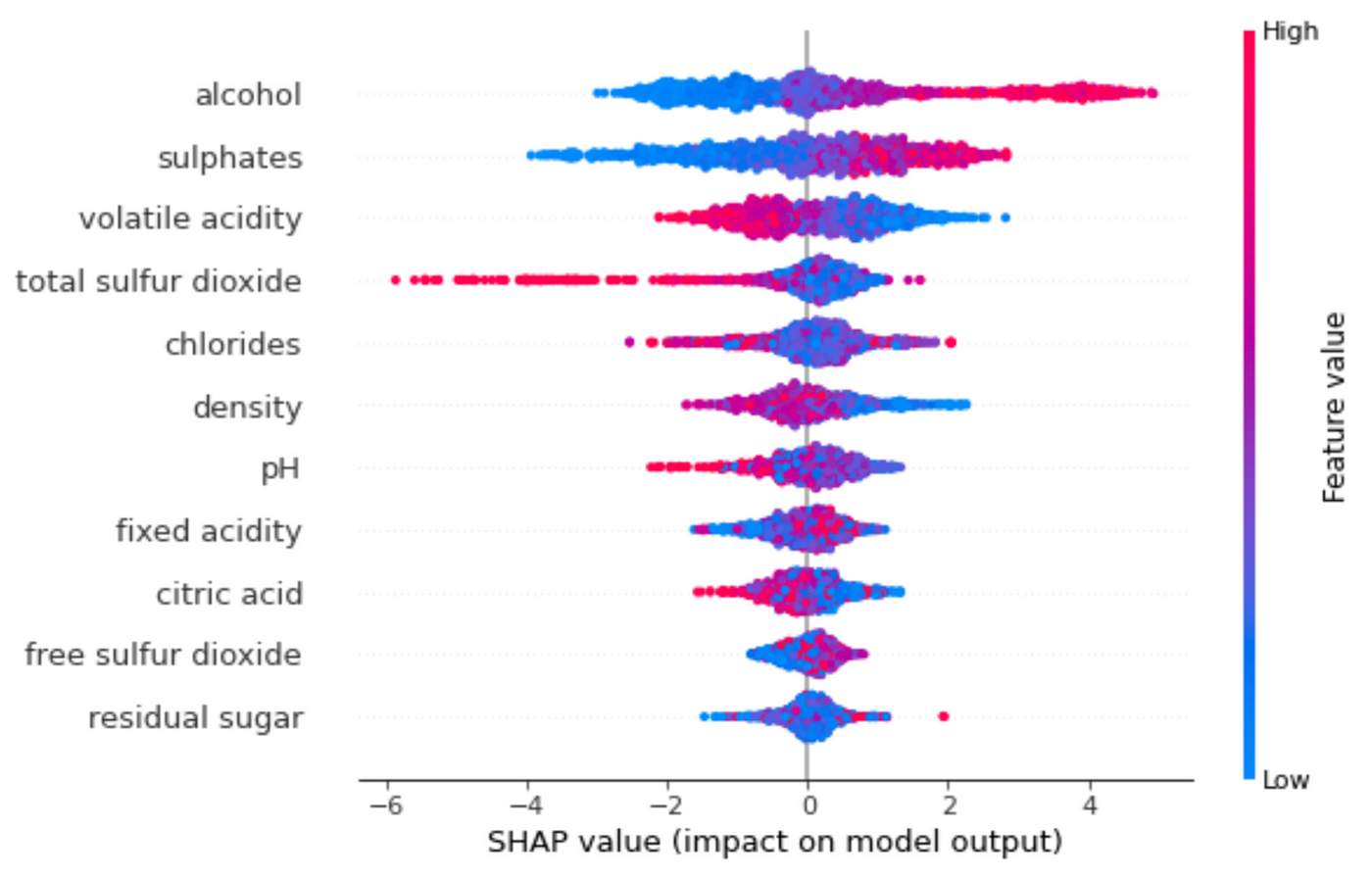

- Feature Importance: Techniques like SHAP and LIME estimate how much each input feature contributed to a model’s prediction, highlighting the drivers behind its decisions.

SHAP and LIME techniques visualizing feature importance
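To make the idea of feature attribution concrete, here is a minimal sketch that computes exact Shapley values, the quantity SHAP approximates, for a tiny hand-written model. It does not use the SHAP or LIME libraries; `toy_model`, its weights, the feature names, and the baseline input are all assumptions made up for illustration.

```python
from itertools import combinations
from math import factorial


def toy_model(x):
    # Hypothetical scoring function (not a trained model): income, debt, age.
    income, debt, age = x
    return 0.5 * income - 0.8 * debt + 0.1 * age


def shapley_values(model, x, baseline):
    """Split model(x) - model(baseline) across features using exact Shapley values."""
    n = len(x)

    def value(subset):
        # Features in `subset` take their real value; the rest stay at the baseline.
        mixed = [x[i] if i in subset else baseline[i] for i in range(n)]
        return model(mixed)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Shapley weight for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


x = [60.0, 20.0, 40.0]         # the input we want explained (assumed values)
baseline = [50.0, 10.0, 30.0]  # an assumed "typical" reference input
phi = shapley_values(toy_model, x, baseline)

for name, contribution in zip(["income", "debt", "age"], phi):
    print(f"{name}: {contribution:+.2f}")
```

The contributions add up to the difference between the model’s output for `x` and for the baseline, which is what makes this kind of attribution easy to read. Exact enumeration grows exponentially with the number of features, so it is only practical for toy cases like this one.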

- Counterfactual Explanations: These “what-if” scenarios explore how changes in input data might alter the model’s output, providing insights into its reasoning process.

Counterfactual Explanations in AI

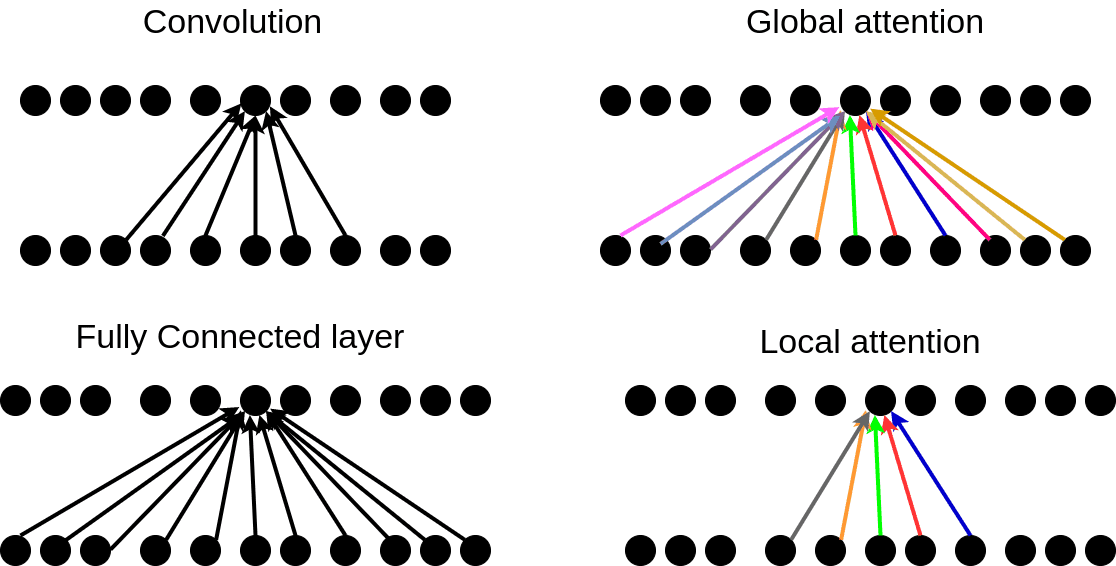
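The “what-if” idea behind counterfactual explanations can be sketched with a simple search: start from a rejected input and look for the smallest change to one feature that flips the decision. The decision rule `approve_loan`, its weights and threshold, and the applicant’s numbers are hypothetical stand-ins for a real classifier and real data.

```python
def approve_loan(income, debt):
    # Hypothetical decision rule standing in for a trained classifier.
    # Inputs are whole numbers (for example, thousands of dollars).
    return 5 * income - 8 * debt >= 100


def find_counterfactual(income, debt, step=1, max_steps=100):
    """Return the smallest increased income that flips the decision, or None."""
    original = approve_loan(income, debt)
    for k in range(1, max_steps + 1):
        candidate = income + k * step
        if approve_loan(candidate, debt) != original:
            return candidate
    return None


applicant_income, applicant_debt = 40, 20  # assumed example applicant
cf_income = find_counterfactual(applicant_income, applicant_debt)

print(f"Approved at current income: {approve_loan(applicant_income, applicant_debt)}")
print(f"Smallest income that flips the decision: {cf_income}")
```

The result reads as an explanation a person can act on: with debt unchanged, this applicant would have been approved at the returned income. Real counterfactual methods search over several features at once and try to keep the suggested change realistic.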

- Attention Mechanisms: In natural language processing, attention weights show which parts of an input text the model weighs most heavily when making a prediction. They offer a glimpse into its internal processing, although high attention on a token does not by itself prove that the token caused the prediction.

Attention Mechanisms in AI
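As a rough illustration of what attention weights look like, the sketch below computes scaled dot-product attention for a single query over four tokens. The tokens, the two-dimensional key vectors, and the query vector are invented for this example; in a real model they would be learned.

```python
import math


def softmax(scores):
    # Subtract the maximum for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_weights(query, keys):
    """Scaled dot-product attention weights of one query over a list of keys."""
    d = len(query)
    scores = [
        sum(q * k for q, k in zip(query, key)) / math.sqrt(d)
        for key in keys
    ]
    return softmax(scores)


tokens = ["the", "movie", "was", "terrible"]
# Hypothetical key vectors, one per token, made up for illustration.
keys = [[0.1, 0.0], [0.5, 0.2], [0.1, 0.1], [2.0, 1.5]]
query = [1.0, 1.0]  # hypothetical query vector

weights = attention_weights(query, keys)
top_token = tokens[weights.index(max(weights))]

for token, weight in zip(tokens, weights):
    print(f"{token:>10}: {weight:.3f}")
print(f"Most attended token: {top_token}")
```

The weights sum to one, so they can be read as how the model distributes its focus across the input. Plotting them as a heatmap over the text is a common way to present this to readers.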

Challenges and the Road Ahead:

While the path to interpretable AI is paved with progress, hurdles remain:

- Complexity Barrier: Highly complex models, like deep neural networks, can be notoriously difficult to explain, pushing the boundaries of current interpretability techniques.

- Trade-off between Performance and Explainability: Often, simpler, more transparent models come at the cost of reduced accuracy. Striking the right balance between explainability and performance remains a challenge.

- Human Biases in Explanations: Interpretability tools can themselves introduce human biases if they are not carefully designed and responsibly used.

Despite these challenges, the push toward interpretable AI continues. Through continued research and collaboration, we can unlock the full potential of this technology, building AI systems that are not just powerful but also trustworthy and transparent.
