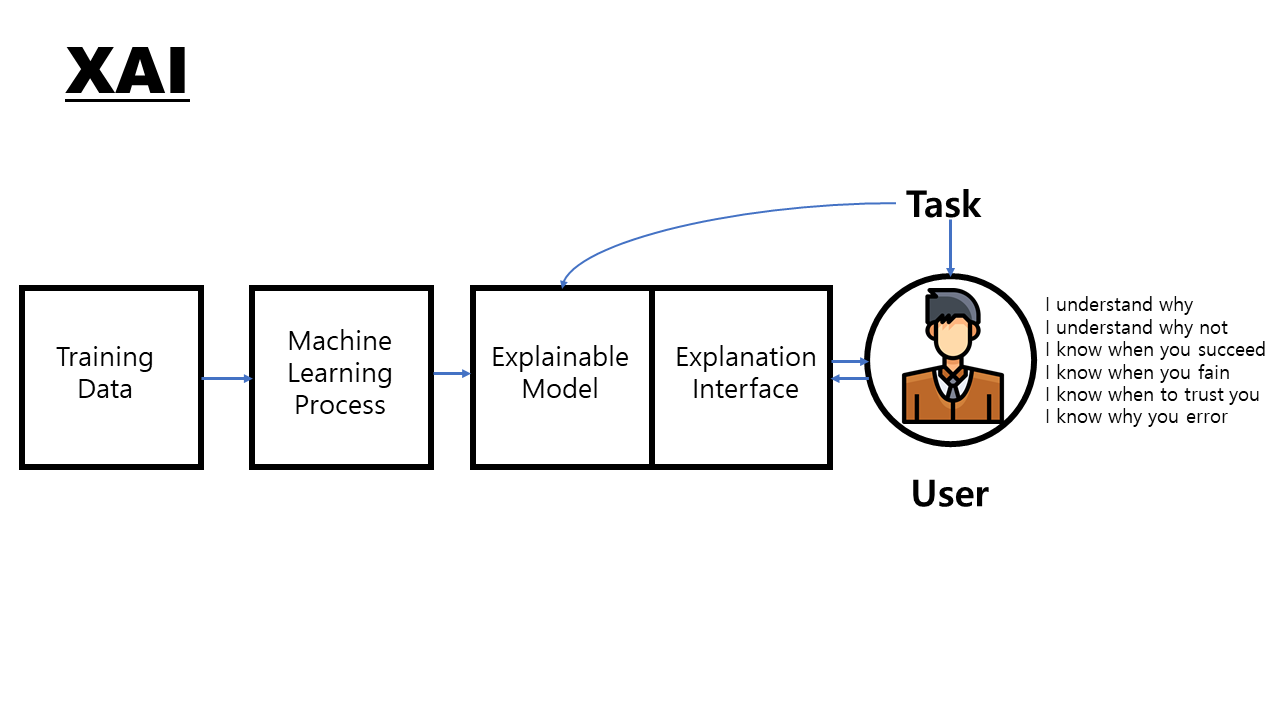

Imagine a powerful decision-making tool, capable of crunching vast amounts of data and spitting out precise predictions. Now imagine that very tool operates like a black box, its internal workings shrouded in mystery. This opaque reality is the current state of many Artificial Intelligence (AI) systems, raising concerns about fairness, accountability, and ultimately, trust.

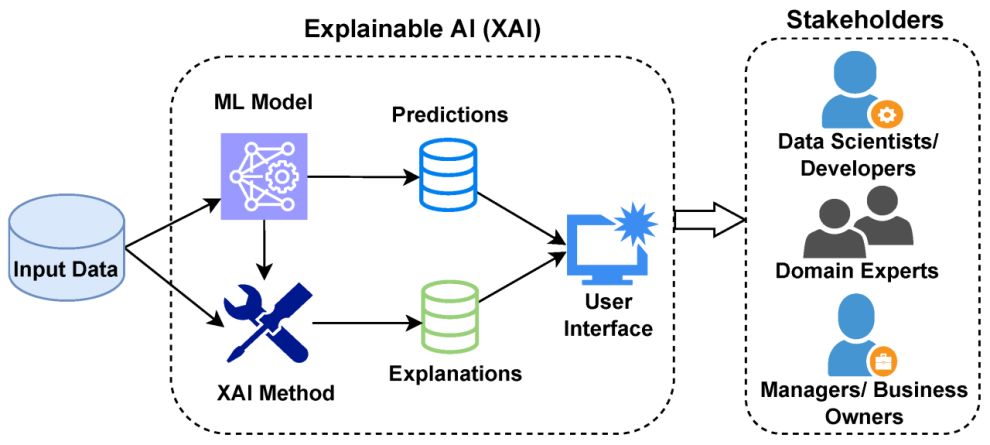

Enter Explainable and Interpretable AI (XAI), a burgeoning field dedicated to cracking open the algorithmic black box and illuminating the inner workings of AI models. By fostering transparency, XAI paves the way for ethical and responsible AI development, ensuring decisions are not blindly accepted but rigorously understood.

Why Explainability Matters: Beyond the Black Box

The “black box” nature of many AI models, particularly deep neural networks and large ensembles, raises several critical concerns:

- Fairness: Without understanding how an AI model arrives at a decision, it’s impossible to identify and address potential biases embedded within the data or the algorithm itself. This can lead to discriminatory outcomes, impacting individuals and communities unfairly.

- Accountability: When AI systems make consequential decisions, such as loan approvals or criminal justice risk assessments, who is held responsible if things go wrong? XAI helps trace errors to their root causes, which makes accountability possible and fosters trust in the technology.

- Deception and Manipulation: AI models can be manipulated through adversarial attacks, in which small, deliberately crafted changes to the input produce a wrong output, and opacity makes such manipulation harder to notice. XAI techniques can help detect these manipulations and protect the integrity of AI models.

Shining a Light: XAI Techniques in Action

Fortunately, researchers and developers are rapidly innovating XAI techniques to make AI more transparent. Here are a few key approaches:

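- Local Interpretable Model-Agnostic Explanations (LIME): This technique explains a single prediction of a complex model by perturbing the input, observing how the prediction changes, and fitting a simpler, interpretable model (such as a sparse linear model) that approximates the complex model in the neighborhood of that instance.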

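- Feature Importance: Ranking features by how much they contribute to a model’s predictions shows which factors have the biggest impact on the outcome. One common method is permutation importance, which measures how much a model’s performance drops when the values of a single feature are shuffled.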

- Counterfactual Explanations: Counterfactuals pose “what if?” questions to an AI model. They explore how changing specific input features would change the model’s prediction, for example how much higher an applicant’s income would need to be for a denied loan to be approved.

- Visualizations: Interactive visualizations can make complex model behavior more easily digestible by humans, allowing them to explore how changes in input data translate to changes in predictions.
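
To make two of the approaches above more concrete, here is a minimal sketch of permutation-based feature importance and a one-feature counterfactual search. Everything in it is an assumption made for illustration: `black_box` is a hypothetical toy loan scorer standing in for an opaque model, the feature names and thresholds are invented, and the data is randomly generated. It uses only the Python standard library and is not a substitute for an established XAI toolkit.

```python
import random

def black_box(row):
    """Hypothetical loan scorer standing in for an opaque model (1 = approve)."""
    income, debt, age = row
    score = 3 * income - 4 * debt  # age is deliberately ignored by this toy model
    return 1 if score > 50 else 0

def accuracy(model, rows, labels):
    """Fraction of rows where the model's output matches the label."""
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)

def permutation_importance(model, rows, labels, seed=0):
    """Accuracy drop observed when each feature column is shuffled in turn."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    drops = []
    for j in range(len(rows[0])):
        column = [r[j] for r in rows]
        rng.shuffle(column)
        shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
        drops.append(baseline - accuracy(model, shuffled, labels))
    return drops

def counterfactual(model, row, feature, step=1, max_steps=100):
    """Smallest increase to one feature (in units of step) that flips the prediction."""
    original = model(row)
    for k in range(1, max_steps + 1):
        candidate = row[:feature] + (row[feature] + k * step,) + row[feature + 1:]
        if model(candidate) != original:
            return candidate
    return None  # no flip found within the search range

# Synthetic applicants: (income, debt, age). Labels are the model's own outputs,
# so the importance scores measure how much the model relies on each feature.
rng = random.Random(42)
rows = [(rng.randint(10, 60), rng.randint(0, 30), rng.randint(18, 80)) for _ in range(200)]
labels = [black_box(r) for r in rows]

importances = permutation_importance(black_box, rows, labels)
print(dict(zip(["income", "debt", "age"], importances)))  # age scores 0.0

applicant = (20, 10, 40)  # denied by the toy model
print(counterfactual(black_box, applicant, feature=0))  # (31, 10, 40)
print(counterfactual(black_box, applicant, feature=2))  # None: age never matters
```

The importance scores only require calling the model, not inspecting it, which is why this style of explanation works on models whose internals are inaccessible. The counterfactual search here changes one feature in one direction; practical methods search over several features at once and try to keep the suggested change realistic.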

Beyond Technique: Building Trustworthy AI

While XAI techniques offer valuable tools, it’s crucial to remember that they are not a silver bullet. Building truly trustworthy AI requires a multifaceted approach:

- Human-in-the-loop design: Integrating human oversight and understanding into AI systems ensures decisions align with ethical considerations and human values.

- Data transparency and governance: Ensuring high-quality, unbiased data forms the foundation for fair and accountable AI models.

- Algorithmic auditing and monitoring: Regularly assessing AI systems for biases, errors, and potential vulnerabilities is crucial for maintaining trust.
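
As one illustration of what a recurring bias audit might check, the sketch below compares how often a model gives a positive outcome to each group. The metric (the gap in selection rates, often called demographic parity difference) is only one of several fairness measures and is chosen here as an assumption; the predictions, group labels, and the `0.2` alert threshold are all made up for the example.

```python
from collections import defaultdict

def selection_rates(predictions, groups):
    """Share of positive predictions (1s) within each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}

def parity_gap(rates):
    """Difference between the highest and lowest group selection rate."""
    return max(rates.values()) - min(rates.values())

# Hypothetical audit sample: model decisions and the group each case belongs to.
predictions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

rates = selection_rates(predictions, groups)
gap = parity_gap(rates)
print(rates)  # {'A': 0.75, 'B': 0.25}
print(gap)    # 0.5

ALERT_THRESHOLD = 0.2  # illustrative value, not a recommended standard
if gap > ALERT_THRESHOLD:
    print("Selection-rate gap exceeds threshold; flag for human review.")
```

A large gap does not prove the model is unfair on its own, but it is a cheap signal that tells reviewers where to look more closely.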

Conclusion: Towards a Brighter Future of AI

Explainable and Interpretable AI holds immense potential to unlock the responsible and ethical development of AI technology. By shedding light on the inner workings of AI models, XAI empowers us to build systems that are fair, accountable, and ultimately, trustworthy.

As XAI research continues to evolve, we can envision a future where AI operates not as a black box, but as a transparent partner, working alongside humans to solve complex problems and build a better future for all.
