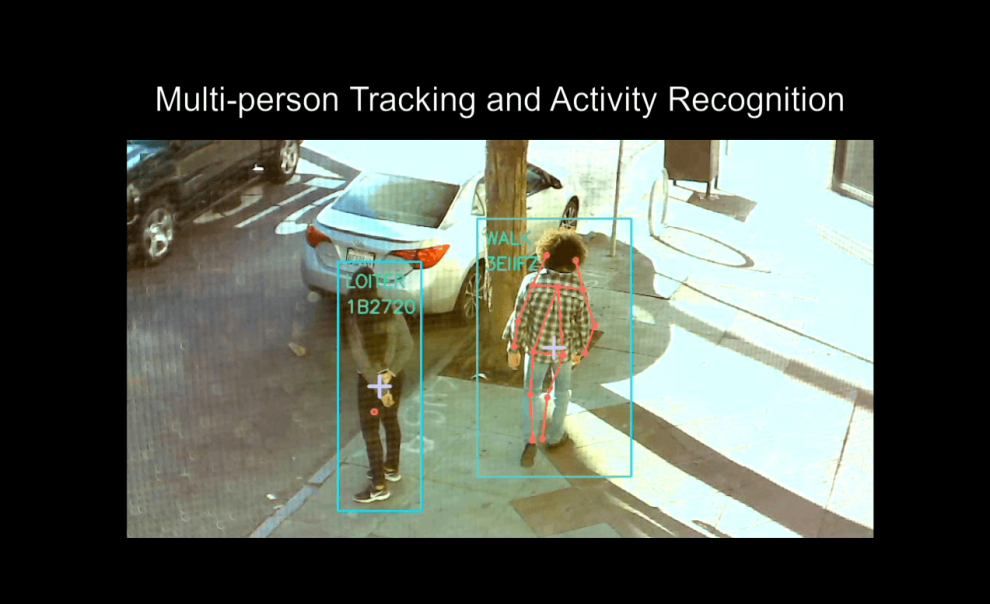

Video action recognition (VAR) models have become important to a range of artificial intelligence (AI) applications, from self-driving vehicles to security systems. However, their internal complexity often makes their decisions hard to inspect, which undermines transparency and trust.

This article examines why stakeholders need interactive probing tools that let them look inside the “black box” of VAR models. We’ll cover the challenges posed by opaque models, survey interactive probing techniques, and highlight areas of work that could narrow the gap between human and machine.

The Risks of VAR Model Opacity

Trained on large video datasets, VAR models can identify human actions in video footage with high accuracy. But the opacity of their decision-making raises pressing issues:

- Limited adoption: Stakeholders may hesitate to use VAR outputs they don’t understand, restricting real-world impact.

- Flawed outputs: Diagnosing errors is difficult when the model’s reasoning is hidden.

- Unfair bias: Discriminatory behavior is harder to detect and monitor when the model’s behavior is opaque.

Without transparency, the potential of VAR models remains constrained. How can we balance accuracy with accountability?

Lifting the Veil: Interactive Probing Techniques

Interactive probing lets stakeholders examine a VAR model’s behavior by altering inputs and observing how its outputs respond. Let’s explore four techniques:

Saliency Maps

These visualizations highlight the input regions that most influence a VAR prediction. Observing the highlighted features provides clues about the model’s reasoning. For instance, if the maps for a self-driving vehicle model consistently concentrate on pedestrians, that may suggest the model underweights other road users when making critical maneuvering decisions.
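One common way to build such a map without access to model internals is occlusion: mask one patch of the input at a time and record how much the model's score drops. The sketch below is a minimal illustration under stated assumptions, not a production method. `toy_action_score` is a hypothetical stand-in for a real VAR model, and videos are represented as nested lists of pixel intensities.

```python
from typing import Callable, List

Frame = List[List[float]]   # rows of pixel intensities
Video = List[Frame]         # frames in playback order


def toy_action_score(video: Video) -> float:
    """Hypothetical stand-in for a VAR model: it only looks at the top-left 2x2 region."""
    total = 0.0
    for frame in video:
        total += frame[0][0] + frame[0][1] + frame[1][0] + frame[1][1]
    return total / (4 * len(video))


def occlusion_saliency(video: Video, score_fn: Callable[[Video], float],
                       patch: int = 2) -> List[List[float]]:
    """Return a per-pixel map of how much the score drops when each patch is zeroed."""
    height, width = len(video[0]), len(video[0][0])
    baseline = score_fn(video)
    saliency = [[0.0] * width for _ in range(height)]
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            # Zero the same patch in every frame, leaving the original video untouched.
            occluded = [
                [
                    [
                        0.0 if (top <= r < top + patch and left <= c < left + patch) else value
                        for c, value in enumerate(row)
                    ]
                    for r, row in enumerate(frame)
                ]
                for frame in video
            ]
            drop = baseline - score_fn(occluded)
            for r in range(top, min(top + patch, height)):
                for c in range(left, min(left + patch, width)):
                    saliency[r][c] = drop
    return saliency


# Three uniform 4x4 frames; only the top-left patch should matter to the toy model.
video = [[[1.0] * 4 for _ in range(4)] for _ in range(3)]
saliency = occlusion_saliency(video, toy_action_score)
```

With a real model, `score_fn` would wrap the model's confidence for the predicted action, and the resulting map would typically be drawn as a heat map over the frames.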

Counterfactual Explanation

This approach involves changing an input in a controlled way and checking how the output changes. For example, blurring a weapon in video footage could reveal whether its presence drives a “violent altercation” classification. Such probing can also expose models that are unfairly influenced by attributes such as race or gender.
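The blurring probe can be sketched in a few lines. Everything here is illustrative: `toy_classifier` is a hypothetical stand-in that reacts to any very bright pixel (playing the role of the weapon), and `blur_region` is a crude blur that replaces a square region with its mean intensity.

```python
from typing import List

Frame = List[List[float]]
Video = List[Frame]


def toy_classifier(video: Video) -> str:
    """Hypothetical stand-in for a VAR model: a very bright pixel triggers the label."""
    peak = max(value for frame in video for row in frame for value in row)
    return "violent altercation" if peak > 0.5 else "no altercation"


def blur_region(video: Video, top: int, left: int, size: int) -> Video:
    """Return a copy of the video with a square region replaced by its mean intensity."""
    blurred = []
    for frame in video:
        cells = [frame[r][c]
                 for r in range(top, top + size)
                 for c in range(left, left + size)]
        mean = sum(cells) / len(cells)
        new_frame = [row[:] for row in frame]  # copy so the original stays intact
        for r in range(top, top + size):
            for c in range(left, left + size):
                new_frame[r][c] = mean
        blurred.append(new_frame)
    return blurred


# Dark 4x4 frames with one bright pixel standing in for the object of interest.
video = [[[0.0] * 4 for _ in range(4)] for _ in range(3)]
for frame in video:
    frame[1][1] = 1.0

original_label = toy_classifier(video)
counterfactual_label = toy_classifier(blur_region(video, top=0, left=0, size=2))
prediction_changed = original_label != counterfactual_label
```

If the label flips after the edit, the blurred region was driving the classification. If it does not, the model is relying on something else in the footage.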

Explanation by Example

Here, stakeholders are shown training examples that are similar to the input and carry the same classification. By comparing a new input with these supporting examples, users can better understand the model’s decision policy. For instance, variation among examples classified as “joy” reveals nuances in how a model interprets human emotion.
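A simple way to find supporting examples is a nearest-neighbor search in the model's feature space. The sketch below assumes each training clip already has an embedding vector. The clip names, labels, and two-dimensional embeddings are made up for illustration.

```python
import math
from typing import List, Tuple

# (clip name, label, embedding) triples; all values are hypothetical.
Example = Tuple[str, str, List[float]]

training_set: List[Example] = [
    ("clip_a", "joy", [0.9, 0.1]),
    ("clip_b", "joy", [0.8, 0.3]),
    ("clip_c", "anger", [0.1, 0.9]),
    ("clip_d", "joy", [0.5, 0.5]),
]


def nearest_examples(query: List[float], label: str,
                     examples: List[Example], k: int = 2) -> List[str]:
    """Return the names of the k training clips with the given label closest to the query."""
    same_label = [example for example in examples if example[1] == label]
    ranked = sorted(same_label, key=lambda example: math.dist(query, example[2]))
    return [example[0] for example in ranked[:k]]


# Embedding of a new clip that the model classified as "joy".
supporting = nearest_examples([0.9, 0.15], "joy", training_set)
```

Showing the retrieved clips next to the new input lets a reviewer judge whether the model groups them for sensible reasons.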

Interactive Input Editing

This technique lets users edit inputs in real time, for example by occluding objects or altering playback speed, to reveal what the model is sensitive to. Unexpected or inconsistent responses point to areas for improvement, such as robustness to lighting changes.
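Behind an interactive editor, each edit amounts to a transformation of the input followed by a fresh model call. The sketch below applies two such edits, a playback speed change and a brightness change, and collects the responses. `motion_energy` is a hypothetical stand-in for a model score, chosen because it reacts visibly to both edits.

```python
from typing import Dict, List

Frame = List[List[float]]
Video = List[Frame]


def motion_energy(video: Video) -> float:
    """Hypothetical stand-in for a model score: mean absolute change between frames."""
    diffs = []
    for previous, current in zip(video, video[1:]):
        for row_prev, row_curr in zip(previous, current):
            for p, c in zip(row_prev, row_curr):
                diffs.append(abs(c - p))
    return sum(diffs) / len(diffs)


def change_speed(video: Video, factor: int) -> Video:
    """Simulate faster playback by keeping every factor-th frame."""
    return video[::factor]


def scale_brightness(video: Video, gain: float) -> Video:
    """Simulate a lighting change by scaling every pixel."""
    return [[[value * gain for value in row] for row in frame] for frame in video]


# Nine 2x2 frames whose brightness rises steadily over time.
video = [[[t * 0.25] * 2 for _ in range(2)] for t in range(9)]

edits: Dict[str, Video] = {
    "original": video,
    "2x speed": change_speed(video, 2),
    "half brightness": scale_brightness(video, 0.5),
}
responses = {name: motion_energy(edited) for name, edited in edits.items()}
```

Here the score doubles at double speed and halves at half brightness, so a reviewer would flag this toy model as sensitive to both playback speed and lighting.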

Building Trust with Interactive Tools

While promising, these techniques remain largely inaccessible to non-technical audiences. To foster wider adoption, here are vital areas for advancement:

Natural Language Interfaces

Conversational interfaces that accept intuitive, plain-language queries would significantly boost usability for diverse stakeholders. Letting people question a model in their own words makes its behavior easier to examine.

Explainable AI Toolkits

Open-source interactive probing toolkits could make these techniques widely available and make audits easier. Integrating such toolkits into development pipelines promotes responsible VAR deployment.

Evaluation Benchmarks

Standardized benchmarks that measure how well probing techniques work are essential for improving them. Quantifying transparency also gives claims about model fairness and trust something measurable to rest on.
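As one illustration of what such a benchmark could measure, a deletion-style test removes input features in the order an explanation ranks them and tracks the model's score. A faithful ranking should make the score fall quickly, giving a lower average along the curve. The sketch below is an assumption-laden toy: the linear `toy_score` model and its weights are hypothetical, and in a video setting the features would be patches or frames.

```python
from typing import Callable, List

# Hypothetical feature weights of a toy linear model.
WEIGHTS = [0.5, 0.25, 0.125, 0.125]


def toy_score(features: List[float]) -> float:
    """Hypothetical stand-in for a model score: a weighted sum of the features."""
    return sum(weight * value for weight, value in zip(WEIGHTS, features))


def deletion_curve_mean(features: List[float], ranking: List[int],
                        score_fn: Callable[[List[float]], float]) -> float:
    """Delete features in ranked order and return the mean score along the curve."""
    current = list(features)
    scores = [score_fn(current)]
    for index in ranking:
        current[index] = 0.0  # "delete" the feature
        scores.append(score_fn(current))
    return sum(scores) / len(scores)


features = [1.0, 1.0, 1.0, 1.0]
faithful = deletion_curve_mean(features, [0, 1, 2, 3], toy_score)    # most important first
unfaithful = deletion_curve_mean(features, [3, 2, 1, 0], toy_score)  # least important first
```

The ranking that matches the model's true weights yields the lower value, which is the kind of comparison a shared benchmark could standardize across probing techniques.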

Illuminating the Way Forward

Interactive probing helps stakeholders understand otherwise opaque VAR models. By pairing accuracy with accountability, these tools can pave the way for trusted VAR integration across industries. Ultimately, transparency narrows the gap between human and machine and helps AI deliver on its potential.
