The promise of artificial intelligence (AI) is vast, and its potential to revolutionize industries is undeniable. However, amidst its brilliance lurks a shadow: the risk of perpetuating bias and discrimination, even in seemingly objective algorithms. Nowhere is this concern more pressing than in lending, where access to capital can be life-changing. So can AI be used to detect unfair lending practices, flipping the script and becoming a safeguard against digital discrimination?

The Problem: Algorithmic Bias in Lending

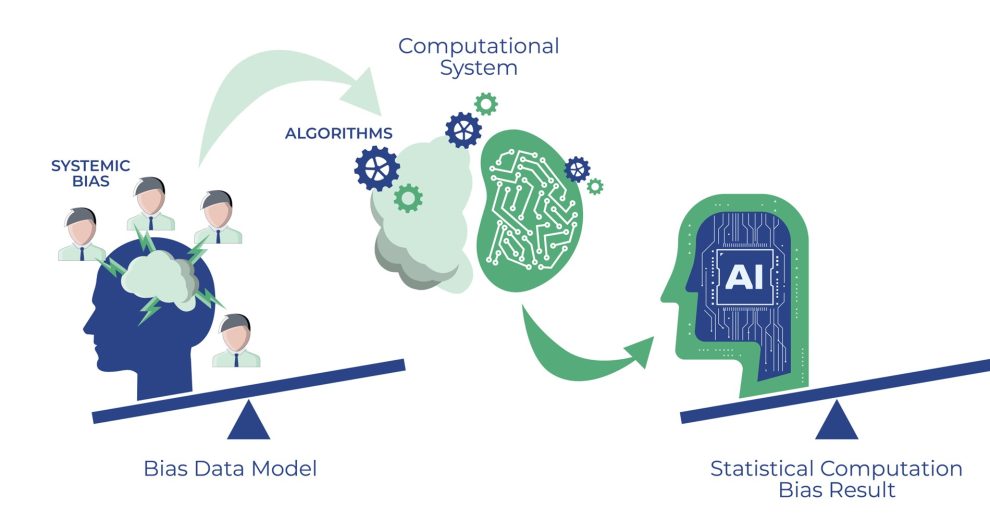

Traditionally, loan approvals relied on human judgment, which is prone to personal bias. AI offers a seemingly unbiased alternative, analyzing large datasets to evaluate creditworthiness. However, the data itself can be biased, reflecting past inequalities in lending practices. The result is algorithmic bias, where protected groups such as racial minorities or women are unfairly disadvantaged by factors unrelated to their actual creditworthiness.

The consequences are real. A 2018 study by the Federal Reserve Bank of Boston found that Black and Hispanic borrowers were more likely to be denied mortgages even when they had similar credit scores to white applicants. This systemic discrimination not only harms individuals but also widens the racial wealth gap, perpetuating economic inequality.

The Solution: Using AI for Good

Instead of succumbing to despair, we can leverage AI’s vast capabilities to detect and mitigate these biases. Here are some promising approaches:

- Transparency and Explainability: Black-box algorithms, which make decisions without revealing their reasoning, are fertile ground for bias. Instead, lenders can adopt explainable AI (XAI) models that provide insights into how a decision was made. This allows for scrutiny and identification of potential bias lurking within the algorithm.

- Data Curation and Debiasing: The quality of data determines the quality of AI models. By carefully curating datasets so that they are representative and do not simply encode historical biases, lenders can build fairer algorithms. Techniques such as data augmentation, which supplements the dataset with examples from underrepresented groups, and debiasing algorithms can help achieve this.

- Adversarial Testing: Imagine sending AI models on a scavenger hunt for hidden biases. Adversarial testing involves creating simulated data points designed to expose how the model behaves when dealing with characteristics associated with protected groups. This helps identify and address potential discrimination before it impacts real people.

- Human-in-the-Loop Systems: AI shouldn’t replace human judgment entirely. Hybrid systems, where AI models provide recommendations but final decisions are made by human loan officers with awareness of fair lending practices, can leverage the strengths of both humans and machines while mitigating bias.
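
To make the adversarial testing idea above more concrete, here is a minimal sketch of one simple form of it, sometimes called a flip test: take an applicant, change only the protected attribute, and check whether the decision changes. Everything in it is an assumption made for illustration, including the `flip_test` helper, the `group` field, and the deliberately biased `toy_model`. It is not a real lender's model or an established library API.

```python
from typing import Callable, Dict, List

Applicant = Dict[str, object]


def flip_test(
    model: Callable[[Applicant], bool],
    applicants: List[Applicant],
    attribute: str,
    values: List[str],
) -> List[Applicant]:
    """Return applicants whose decision changes when only `attribute` changes."""
    flagged = []
    for applicant in applicants:
        decisions = set()
        for value in values:
            # Copy the applicant and vary nothing except the protected attribute.
            variant = dict(applicant)
            variant[attribute] = value
            decisions.add(model(variant))
        # More than one distinct outcome means the attribute alone swung the decision.
        if len(decisions) > 1:
            flagged.append(applicant)
    return flagged


def toy_model(applicant: Applicant) -> bool:
    # Hypothetical, deliberately biased rule: group "B" faces a higher income bar.
    threshold = 40_000 if applicant["group"] == "A" else 50_000
    return applicant["income"] >= threshold


applicants = [
    {"income": 35_000, "group": "A"},
    {"income": 45_000, "group": "B"},
    {"income": 60_000, "group": "A"},
]

flagged = flip_test(toy_model, applicants, "group", ["A", "B"])
print(flagged)
```

Only the applicant with an income of 45,000 is flagged, because that is the only case where the group label alone decides the outcome. A test like this catches direct use of a protected attribute. It will not, on its own, catch proxies such as a postal code that correlates with group membership, so it complements the other approaches rather than replacing them.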

Challenges and the Road Ahead

While the potential of using AI for fair lending is exciting, challenges remain. Implementing these solutions requires resources, expertise, and a genuine commitment to ethical AI development. Regulatory frameworks that promote responsible AI deployment in lending are also crucial.

Additionally, AI itself is not a magic bullet. Bias can creep in at various stages, from data collection to algorithm design to implementation. Continuous vigilance and human oversight are essential to ensure AI acts as a force for good rather than perpetuating discrimination in a new guise.
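
Part of that vigilance can be automated. The sketch below compares approval rates across groups and raises an alert when one group's rate falls well below a reference group's. The function names, the sample decisions, and the 0.8 cutoff are assumptions for illustration. The cutoff borrows the "four-fifths" rule of thumb from US employment guidelines and should be read as a screening heuristic, not as a legal standard for lending.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def approval_rates(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Compute the share of approved applications for each group."""
    totals: Dict[str, int] = defaultdict(int)
    approved: Dict[str, int] = defaultdict(int)
    for group, was_approved in decisions:
        totals[group] += 1
        if was_approved:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def impact_ratios(rates: Dict[str, float], reference: str) -> Dict[str, float]:
    """Divide each group's approval rate by the reference group's rate."""
    if rates[reference] == 0:
        raise ValueError("reference group has no approvals; ratio is undefined")
    return {
        group: rate / rates[reference]
        for group, rate in rates.items()
        if group != reference
    }


# Hypothetical decision log: (group, approved?) pairs.
decisions = (
    [("A", True)] * 8 + [("A", False)] * 2
    + [("B", True)] * 5 + [("B", False)] * 5
)

rates = approval_rates(decisions)
ratios = impact_ratios(rates, reference="A")

ALERT_THRESHOLD = 0.8  # assumed cutoff, borrowed from the four-fifths heuristic
alerts = [group for group, ratio in ratios.items() if ratio < ALERT_THRESHOLD]
print(rates, ratios, alerts)
```

Here group B is approved half the time against 80% for group A, so it is flagged for review. A gap like this does not prove discrimination by itself, since the groups may differ in legitimate credit factors, but it tells a human reviewer where to look first.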

Conclusion: A Call to Action

The fight against digital discrimination in lending is a complex one, demanding a multi-pronged approach. Utilizing AI for bias detection has the potential to be a powerful tool, but only if deployed responsibly and ethically. It’s a call to action for lenders, policymakers, and AI developers to collaborate on solutions that ensure access to capital is fair, equitable, and truly data-driven.
