On September 23, 2024, OpenAI CEO Sam Altman ignited a fierce debate with a provocative blog post, warning that insufficient AI infrastructure could lead to global conflicts. As I stand outside OpenAI’s headquarters, the tension is palpable, with tech enthusiasts and concerned citizens alike gathering to discuss the implications of Altman’s bold claims.

“If we don’t build enough infrastructure, AI will be a very limited resource that wars get fought over, and that becomes mostly a tool for rich people,” Altman declared in his blog post. His words echo through the tech community, sparking both excitement and concern.

The OpenAI chief’s call to action is clear: massive investment in AI infrastructure is not just desirable; it’s essential for market dominance and global stability. But as I speak with experts and industry insiders, it becomes evident that this vision comes with significant challenges and potential drawbacks.

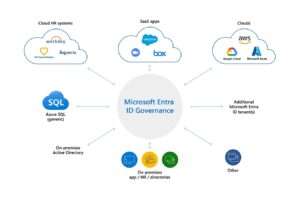

Altman’s plea for increased infrastructure isn’t falling on deaf ears. Just last week, tech giant Microsoft and investment firm BlackRock announced a staggering $30 billion fund aimed at enhancing American competitiveness in AI. This move underscores the growing focus on AI infrastructure within the industry.

Dr. Emily Chen, a prominent AI ethicist, explains the rationale behind this push: “The race for AI supremacy is akin to the space race of the 1960s. Countries and companies alike see AI as the key to future economic and technological dominance.”

However, not everyone is convinced that this breakneck pace of development is in society’s best interest.

As I tour a massive data center on the outskirts of San Jose, the scale of AI infrastructure becomes apparent. Rows upon rows of servers hum ceaselessly, consuming vast amounts of energy and water.

Alex de Vries, founder of Digiconomist, warns of the environmental impact: “If you’re talking about large-scale data centers, they are going to be consuming a huge amount of power and a huge amount of water. The environmental consequences of a big surge in power demand are typically very, very bad — way, way worse than is being acknowledged by the Big Tech companies.”

This sentiment is echoed by Shaolei Ren, associate professor at UC Riverside, who argues for a shift in AI development strategy: “I think we need to shift the way we build AI, not just rely on bigger and bigger models. This is not really sustainable or scalable.”

While proponents of AI infrastructure investment paint a rosy picture of economic growth, some observers are skeptical. De Vries points out: “It costs a whole lot of resources, while data centers don’t generate that many benefits for the local economy: not too many jobs, very little other business activity because no one has to be near a data center.”

This economic uncertainty is reflected in recent earnings calls from tech giants like Microsoft and Alphabet, where executives have struggled to provide clear timelines for returns on their massive AI investments.

Beyond environmental and economic concerns, experts warn of potential social upheaval. Professor Cary Coglianese of the University of Pennsylvania cautions: “There’s a real disconnect between where the world is today socially and where it is technologically — and that’s something that the technologists and the techno-optimists can often overlook.”

As we discuss the implications of AI development, Coglianese paints a nuanced picture: “Any human endeavor that involves an optimization challenge can be made more efficient through artificial intelligence. Some of those uses can be good, like finding malignancies on MRI scans, a great use of AI technology, but we’ll also be making much more efficient weapon systems, or automating weapon systems, or creating tools that can oppress people that can be made more, quote-unquote, ‘efficient.’”

Walking through downtown San Francisco, the human impact of AI’s rapid advancement is evident. I speak with Sarah, a graphic designer recently laid off due to AI automation. “It’s not just about jobs,” she says, her voice tinged with frustration. “It’s about the kind of society we’re creating. Are we ready for a world where AI makes so many decisions for us?”

Her concerns are not unfounded. Reports of deepfakes infiltrating political systems and AI-generated revenge porn highlight the dark side of this technological revolution.

As the sun sets over Silicon Valley, casting long shadows across the gleaming facades of tech company headquarters, the complexity of the AI infrastructure debate comes into sharp focus.

Altman’s blog post concludes with a note of cautious optimism: “It will not be an entirely positive story. But the upside is so tremendous that we owe it to ourselves, and the future, to figure out how to navigate the risks in front of us.”

Yet, as our exploration reveals, the path forward is far from clear. The push for massive AI infrastructure investment promises technological breakthroughs but also raises serious concerns about environmental sustainability, economic inequality, and social disruption.

As we stand on the brink of what Altman calls the “Intelligence Age,” the challenge before us is clear: how do we harness the potential of AI while mitigating its risks? The answer will require not just technological innovation, but also careful consideration of our values as a society.

The race for AI dominance is undoubtedly on, but as we sprint towards that future, we must not lose sight of the world we’re leaving in our wake. The true measure of AI’s success may not be in the power of its algorithms or the scale of its infrastructure, but in how it enhances the human experience for all, not just a privileged few.
