Artificial intelligence (AI) is rapidly transforming our world, from revolutionizing healthcare to automating industries and powering virtual assistants. Yet, alongside its immense potential lies a shadow of risks that, if left unchecked, could have dire consequences. To harness the power of AI for good, we must acknowledge and address these risks proactively.

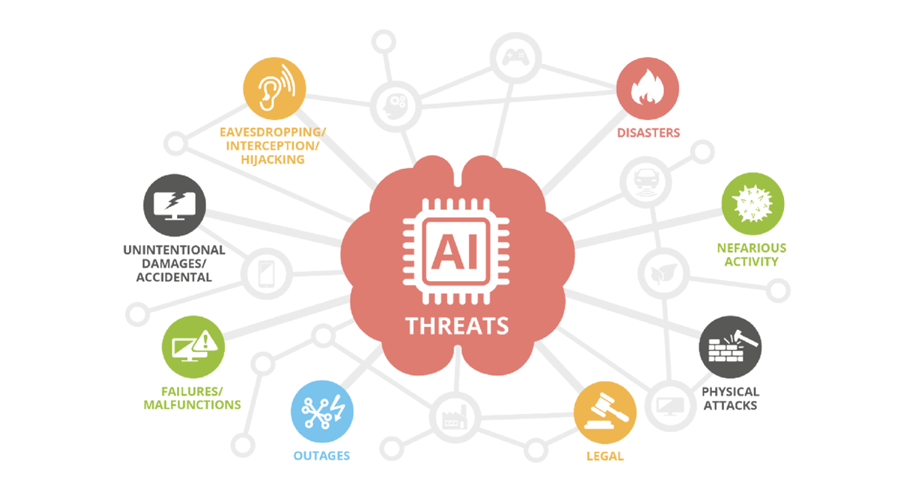

The Looming Threats:

AI comes with several risks that must be addressed:

- Bias and discrimination: AI algorithms trained on biased data can perpetuate and amplify existing societal inequalities. Imagine a hiring system that, trained on biased historical hiring records, favors certain demographics and so perpetuates unfair practices.

- Privacy erosion: AI’s insatiable appetite for data raises concerns about privacy infringement. Facial recognition technology, for example, can be misused for mass surveillance, eroding individual rights and freedoms.

- Job displacement: AI automation threatens to displace millions of workers, particularly in repetitive, data-driven fields. This raises concerns about economic inequality and social unrest.

- Weaponization and autonomous warfare: The integration of AI into autonomous weapons systems raises ethical and legal quandaries. Imagine self-learning drones making life-or-death decisions on the battlefield, blurring the lines of human responsibility.

- Existential threats: Although this remains a distant possibility, some experts warn that advanced AI could pose an existential threat to humanity if its goals are not aligned with ours.

Building Safeguards:

Fortunately, we are not powerless against these risks. By implementing robust safeguards and fostering responsible AI development, we can mitigate these threats and help ensure that AI has a positive impact on our world. Here are some key strategies:

- Transparency and explainability: AI systems should be transparent, allowing users to understand how decisions are made and why. Black-box algorithms shrouded in secrecy erode trust and fuel potential misuse.

- Fairness and non-discrimination: AI algorithms must be rigorously tested for bias and continuously monitored to ensure fair treatment for all. Diverse teams developing and deploying AI are crucial to achieving this goal.

- Accountability and human oversight: While AI can assist in decision-making, ultimate responsibility must always lie with humans. Clear lines of accountability and human oversight are essential to prevent AI from operating unchecked.

- Data governance and privacy protection: Robust data privacy regulations and responsible data collection practices are essential to safeguard individual rights and prevent misuse of personal information.

- Public engagement and education: Open communication, public education, and stakeholder participation are key to fostering trust and shaping responsible AI development.
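The fairness point above can be made concrete with a small audit. Below is a minimal Python sketch of one common bias check, not a complete fairness test. It assumes a hiring model's shortlisting decisions have been logged as hypothetical (group, shortlisted) pairs, compares each group's selection rate, and computes the ratio of the lowest rate to the highest. The 0.8 threshold follows the "four-fifths rule" of thumb from US employment guidance; the function names, group labels and data are illustrative only.

```python
from collections import defaultdict


def selection_rates(decisions):
    """Return the fraction of positive decisions for each group.

    `decisions` is an iterable of (group, selected) pairs, where
    `selected` is True if the candidate was, e.g., shortlisted.
    """
    totals = defaultdict(int)
    selected = defaultdict(int)
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {g: selected[g] / totals[g] for g in totals}


def disparate_impact_ratio(decisions):
    """Ratio of the lowest group selection rate to the highest (1.0 = parity)."""
    rates = selection_rates(decisions)
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group is favored
    return min(rates.values()) / highest


# Hypothetical audit log: (group label, shortlisted?)
decisions = (
    [("A", True)] * 40 + [("A", False)] * 60
    + [("B", True)] * 20 + [("B", False)] * 80
)

ratio = disparate_impact_ratio(decisions)
print(f"selection rates: {selection_rates(decisions)}")
print(f"disparate impact ratio: {ratio:.2f}")
if ratio < 0.8:  # four-fifths rule of thumb (an assumed screening threshold)
    print("flag for human review: below the four-fifths threshold")
```

Here group B is shortlisted half as often as group A (a ratio of 0.50), so the check flags the system for review. A low ratio does not prove discrimination, and a high one does not rule it out; it is a cheap signal that tells a human reviewer where to look, which is why continuous monitoring and human oversight still matter.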

The Road Ahead:

Ensuring AI safety is not a one-time endeavor but an ongoing process requiring continuous vigilance and adaptation. Governments, industry leaders, academics, and civil society all have vital roles to play in shaping a future where AI serves humanity, not the other way around. By embracing ethical principles, robust governance, and continuous research, we can navigate the labyrinth of AI risks and unlock its vast potential for good.

As AI continues to advance at a breakneck pace, it is crucial that we acknowledge both sides of this double-edged sword. With vigilance, responsibility and cooperation, we can mitigate the risks and harness AI as a force for good, improving medicine, education, sustainability and more for all people. But we must act quickly and with care: AI will not wait for us to prepare.

Key AI Risks and Threats

Understanding the key risks posed by artificial intelligence is the first step toward managing them responsibly. Here are some of the main threats experts warn we must address:

Algorithmic Bias and Discrimination

Because AI systems learn from data, any biases and inaccuracies in that data are carried into the models trained on it. This can lead to unfair and unethical decisions that amplify discrimination against minorities and marginalized communities.

Lack of Transparency and Explainability

AI systems built on machine learning can behave like “black boxes” whose internal workings even their developers struggle to explain. This lack of transparency erodes trust and accountability.

Privacy and Mass Surveillance Concerns

AI depends on large amounts of data, and collecting and using that data can compromise privacy if it is not properly regulated. Technologies like facial recognition can enable mass surveillance when deployed irresponsibly.

Job Losses and Economic Disruption

As AI matches or exceeds human capabilities in certain tasks, many jobs could become redundant, leading to displacement and unemployment. Proactive policies are needed to manage this transition.

Lethal Autonomous Weapons

The development of AI weapons systems that can select and fire on targets without human oversight raises ethical, moral and legal concerns. Rules governing this technology are still being formulated.

Superintelligence and Existential Risk

Although the scenario is still speculative, some experts warn that a superintelligent AI could escape human control and pose an existential threat if its goals and values do not align with humanity’s interests.

Strategies for Responsible AI Governance

Thankfully, measures can be taken to ensure that AI’s development and use remain ethical, fair and focused on benefiting people. Some best practices include:

Ensuring Diversity and Inclusion

Having diverse teams build, manage and audit AI systems helps reduce harmful bias while also boosting innovation.

Instituting Checks and Balances

Humans must remain accountable and in control through governance processes that provide oversight of AI systems, especially those making sensitive decisions.

Promoting Transparent and Explainable AI

Regulations can mandate transparency, traceability and explainable results for AI systems, building public trust and avoiding “black box” algorithms.

Developing Robust Privacy Standards

Strong legal privacy safeguards for data collection, usage and sharing are important to protect individuals’ rights.

Creating Flexible Education Policies

Proactive investment in education and job training helps workers adapt to the transition and share in the benefits of AI-driven economic growth.

What other strategies would you recommend technology companies and governments prioritize to keep AI safe and ethical even as it grows more advanced and integrated into society?
