The rapid advancement of artificial intelligence (AI) brings immense potential across sectors. However, alongside the excitement lies growing concern over ethical issues such as bias perpetuated through flawed data or models. Public trust depends on responsible and ethical AI development. That’s why auditing research outputs before public release is crucial.

This article will explore the urgent need for open ethical standards in auditing AI research. We’ll delve into key challenges and outline a framework focused on responsibility and transparency to build public trust.

The Necessity of Auditing AI Research

Imagine an AI system designed to assess loan applications that inadvertently discriminates due to biases in its training data. Such alarming scenarios are not hypothetical. Biases can lead to unfair outcomes, reinforce inequalities, and erode public trust.

AI research auditing addresses this by systematically evaluating datasets and models for potential issues like:

- Data representativeness – Are datasets diverse and inclusive, or do they perpetuate biases?

- Algorithmic fairness – Do algorithms introduce biases themselves?

- Privacy and security – Are sensitive data and systems protected?

- Explainability – Can decisions be clearly understood and explained, so that discriminatory outcomes can be detected?
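As a rough illustration of the first two checks, the sketch below computes per-group representation gaps and a disparate impact ratio for a toy set of loan decisions. The group labels, the data, the reference shares and the function names are all hypothetical, and real audits typically use richer metrics and statistical tests. A commonly cited rule of thumb treats a ratio below 0.8 as a signal worth investigating, not as proof of discrimination.

```python
from collections import Counter, defaultdict


def representation_gaps(groups, reference_shares):
    """Dataset share minus expected share for each group (0.0 = matches reference)."""
    counts = Counter(groups)
    total = sum(counts.values())
    return {g: counts.get(g, 0) / total - share
            for g, share in reference_shares.items()}


def approval_rates(records):
    """Approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approvals = defaultdict(int)
    for group, approved in records:
        totals[group] += 1
        approvals[group] += int(approved)
    return {g: approvals[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Lowest group approval rate divided by the highest (1.0 = parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # no approvals in any group, so no disparity to measure
    return min(rates.values()) / highest


# Hypothetical toy data: (group label, loan approved?)
decisions = [("A", True), ("A", True), ("A", True), ("A", False),
             ("B", True), ("B", False), ("B", False), ("B", False)]

# Assumed reference population shares, for illustration only
gaps = representation_gaps([g for g, _ in decisions], {"A": 0.5, "B": 0.5})
rates = approval_rates(decisions)
ratio = disparate_impact_ratio(rates)

print("representation gaps:", gaps)
print("approval rates:", rates)
print("disparate impact ratio:", round(ratio, 2))
```

Here both groups are equally represented, yet their approval rates differ sharply. That is exactly the kind of gap an audit should surface for closer review.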

Auditing promotes:

- Ethical development by identifying issues early and fostering accountability

- Public trust through demonstrations of ethical commitment

- Improved quality and robustness by addressing vulnerabilities

Key Challenges in AI Research Auditing

While auditing is critical, it faces practical challenges:

- Lack of widely accepted ethical standards makes objective assessment difficult

- Thorough auditing requires significant expertise, time and computational resources

- Confidentiality concerns may limit sharing of sensitive data/model details

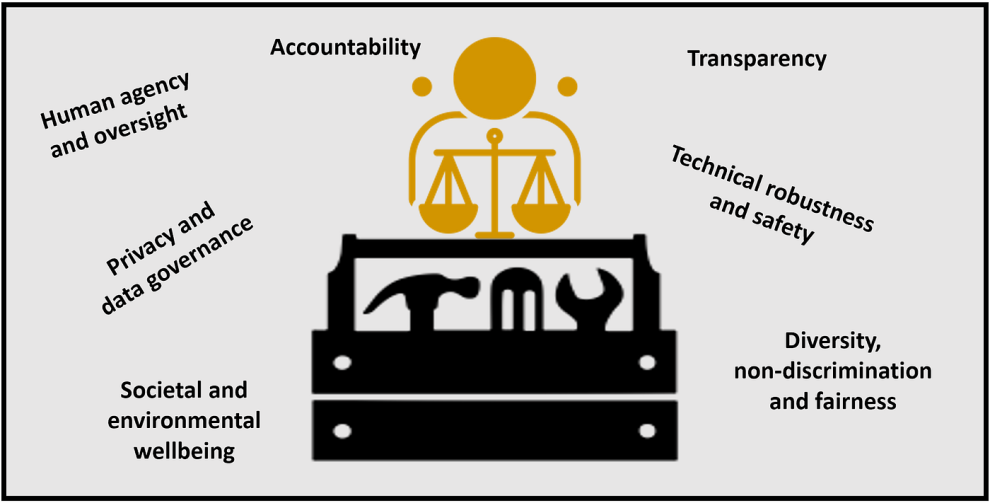

A Framework for Open, Ethical Standards

Overcoming these challenges requires building an open, collaborative auditing framework focused on clear standards, transparency and explanation.

1. Develop Standardized Guidelines

Collaborations between stakeholders like researchers, policymakers and industry can establish comprehensive ethical standards and auditing methodologies.

2. Promote Open Data and Tools

Encouraging sharing of code, data and auditing tools fosters collaboration and expedites auditing.

3. Invest in Auditing Infrastructure

Initiatives creating centralized auditing resources and best practices support comprehensive, efficient auditing.

4. Prioritize Transparency and Explanation

Researchers should develop explainable models and document auditing thoroughly to build understanding and trust.
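One way to make audit documentation concrete is a small, machine-readable audit record that can be published alongside the research. The sketch below is only an assumption about what such a record might contain. The field names, the `AuditRecord` and `AuditFinding` classes, and the example values are hypothetical and do not follow any established standard.

```python
import json
from dataclasses import asdict, dataclass, field


@dataclass
class AuditFinding:
    check: str       # e.g. "data representativeness"
    result: str      # e.g. "pass", "needs review"
    notes: str = ""  # free-text explanation for readers


@dataclass
class AuditRecord:
    model_name: str
    dataset_name: str
    auditor: str
    findings: list = field(default_factory=list)

    def to_json(self):
        """Serialize the record, including nested findings, to JSON."""
        return json.dumps(asdict(self), indent=2)


# Hypothetical example values
record = AuditRecord(
    model_name="loan-scoring-demo",
    dataset_name="applications-sample",
    auditor="internal review team",
)
record.findings.append(
    AuditFinding(
        check="algorithmic fairness",
        result="needs review",
        notes="Approval rates differ across groups.",
    )
)

report = record.to_json()
print(report)
```

Keeping the record in a plain format such as JSON makes it easy to share, compare across audits, and review outside the team.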

The Path Towards Responsible AI Development

Auditing AI research is a crucial step towards responsibility. By collaborating to establish open ethical standards, promote transparency, and foster a culture of accountability, we can ensure AI serves as an inclusive, fair force for good.

This article aimed to highlight the urgent need for ethical auditing standards, but it’s just a starting point. Further discussion of specific principles, existing frameworks and expert guidance can enrich the dialogue on responsible AI advancement. The future depends on it.
