A recent security lapse in the ChatGPT for Mac app has raised concerns about user privacy and the potential for misuse of sensitive information. ChatGPT is OpenAI’s AI chatbot, built on the company’s large language models. The discovery that the Mac app stored user conversations in unencrypted files exposed a serious security flaw and sparked a debate about data protection in the age of artificial intelligence.

A Convenience Turned Liability: Unencrypted Files and Exposed Data

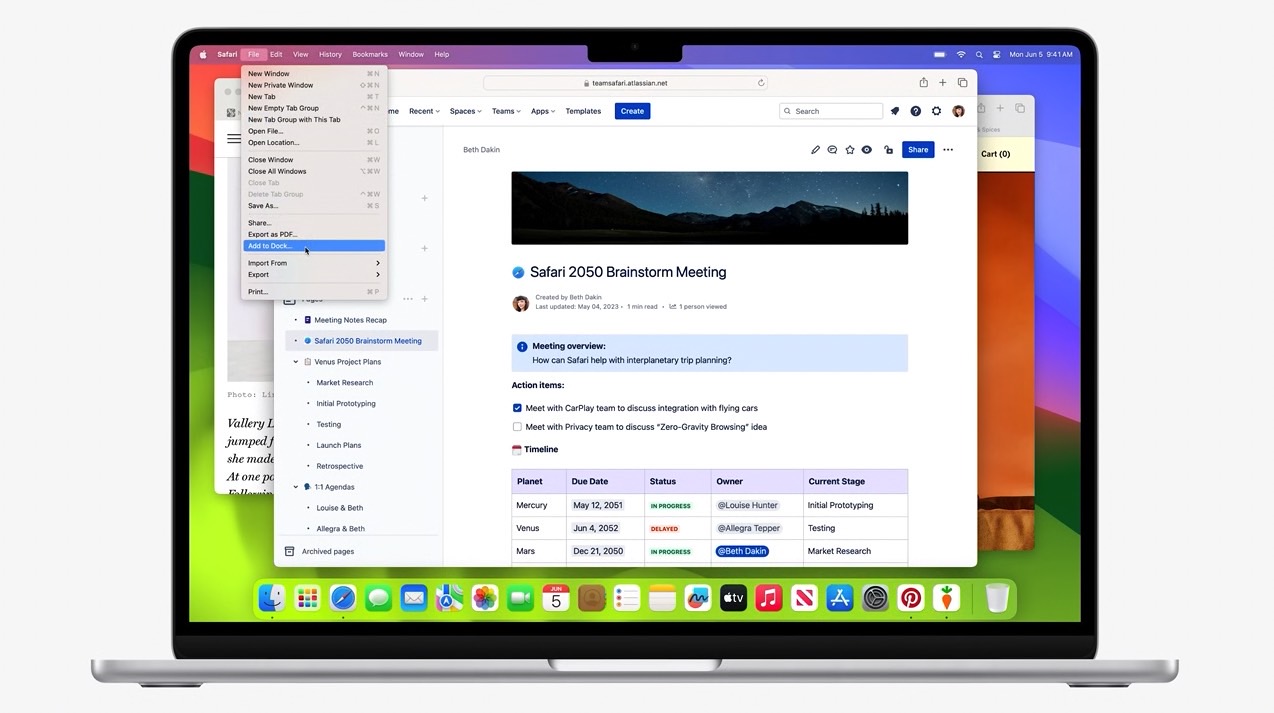

The issue came to light when developer Pedro José Pereira Vieito discovered that the ChatGPT for Mac app was storing conversation history and queries as plain text in the app’s folder inside the user’s Library directory. Because the files were not encrypted, any person or process with access to the user’s account, including malware or another app, could read them.

Here’s a breakdown of the security lapse:

- Plain Text Files: User queries and conversation history were stored in unencrypted text files, leaving them vulnerable to unauthorized access.
- Lack of Sandboxing: The app reportedly opted out of Apple’s App Sandbox, which isolates an app’s data from other apps. Sandboxing is mandatory only for apps distributed through the Mac App Store, and ChatGPT for Mac is distributed directly from OpenAI’s website.
- Potential Consequences: The exposed files could contain sensitive information, depending on what each user discussed with the chatbot. This raises concerns about privacy breaches and the misuse of personal data.

This security flaw highlights the importance of robust data encryption practices, especially when dealing with potentially sensitive user information.
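
To make the difference between plain-text and encrypted storage concrete, the sketch below estimates the Shannon entropy of a block of bytes. Readable text tends to score well under 6 bits per byte, while encrypted data looks close to random and scores near 8. The function names, the 6.0 threshold, and the sample conversation are assumptions made for this illustration, and `os.urandom` merely stands in for ciphertext. None of this reflects how the ChatGPT app actually stores its data.

```python
import math
import os
from collections import Counter


def shannon_entropy(data: bytes) -> float:
    """Return the Shannon entropy of data in bits per byte (0.0 to 8.0)."""
    if not data:
        return 0.0
    total = len(data)
    counts = Counter(data)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())


def looks_like_plaintext(data: bytes, threshold: float = 6.0) -> bool:
    """Heuristic: low-entropy bytes are probably readable text.

    The threshold is an assumed cutoff, and small samples are unreliable,
    so this is a rough audit aid rather than proof of encryption.
    """
    return shannon_entropy(data) < threshold


# Hypothetical conversation record, repeated to get a reasonably large sample.
sample_chat = b'{"role": "user", "content": "What are the symptoms of anemia?"}\n' * 50

# Random bytes stand in for encrypted data, which should look similar.
sample_ciphertext_like = os.urandom(4096)

print(looks_like_plaintext(sample_chat))
print(looks_like_plaintext(sample_ciphertext_like))
```

The sample chat is flagged as plain text and the random bytes are not. A check like this can only suggest that stored files are readable; it cannot confirm that data is properly encrypted, since compressed files also score high.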

What Information Was Exposed? Understanding the Potential Harm

The exact nature of the exposed data remains unclear. However, based on ChatGPT’s functionalities, some potential concerns include:

- Personal Information: Users might have inadvertently included personal details like names, locations, or even financial information in their conversations with the chatbot.
- Sensitive Queries: ChatGPT can be used for various purposes, and some users might have engaged in conversations involving sensitive topics like healthcare concerns or financial anxieties.
- Privacy Violations: The mere act of recording and storing user conversations without proper encryption constitutes a privacy violation, regardless of the specific content.

The potential consequences for users who unknowingly exposed such information can be significant, ranging from identity theft to reputational damage.
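
As a rough illustration of how easily personal details can be pulled out of an unencrypted conversation log, the sketch below scans text for two kinds of sensitive strings. The pattern names, the regular expressions, and the sample sentence are all hypothetical and deliberately simple; real detection of personal data needs far more care than this.

```python
import re

# Illustrative patterns only; they will miss many cases and can over-match.
PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens.
    "card_like_number": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def find_sensitive(text: str) -> dict[str, list[str]]:
    """Return every match for each pattern, keyed by pattern name."""
    return {name: pattern.findall(text) for name, pattern in PATTERNS.items()}


# Hypothetical stored message, not taken from any real conversation.
stored_chat = "My email is jane@example.com and my card is 4111 1111 1111 1111."
hits = find_sensitive(stored_chat)
print(hits)
```

Anything a few lines of pattern matching can find in a plain-text file is equally available to malware running under the same user account.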

OpenAI’s Response: Patching the Leak and Addressing User Concerns

OpenAI, the developer of ChatGPT, responded quickly once the flaw was reported. Here’s a look at its actions:

- Issuing a Patch: OpenAI released an update that it says encrypts locally stored conversations. The company’s statement did not mention any change to sandboxing.
- Acknowledging the Problem: OpenAI said in a statement that it was aware of the issue and had shipped a new version of the app that encrypts conversations.
- Importance of Transparency: Moving forward, OpenAI needs to emphasize transparency regarding data collection practices and user privacy measures.

While OpenAI has taken steps to address the issue, the incident raises concerns about the potential for misuse of user data by AI developers. Increased transparency and robust data security protocols are crucial for building trust with users.

Beyond ChatGPT: A Broader Conversation on AI and User Privacy

The ChatGPT security lapse is not an isolated incident. As AI technology continues to evolve, concerns about user privacy and data security will become increasingly important. Here’s a look at the broader implications:

- The Need for Regulation: Clear regulations are needed to ensure that AI developers implement proper data security measures and obtain informed consent from users regarding data collection practices.
- Transparency in AI Development: Increased transparency regarding AI algorithms and how user data is used is essential for building trust between users and AI developers.
- User Education: Empowering users with knowledge about potential risks associated with AI interactions and how to protect their privacy is crucial in the age of artificial intelligence.

The ChatGPT incident serves as a stark reminder of the importance of prioritizing user privacy in the development and deployment of AI applications. Moving forward, a collaborative effort involving developers, regulators, and users is necessary to ensure the responsible and ethical use of AI technology.

Lessons Learned: The Road to Responsible AI Development

The security lapse in ChatGPT for Mac highlights the need for a multi-pronged approach to responsible AI development:

- Security Audits: Regular penetration testing and security audits are crucial for identifying and addressing vulnerabilities in AI applications before they are deployed.
- User Privacy by Design: Data privacy considerations should be embedded throughout the entire AI development process, not as an afterthought.
- Building Trust with Users: Open communication, clear data practices, and robust security measures are essential for building trust with users when it comes to AI interactions.

The potential of AI technology is undeniable, but it must be balanced with ethical considerations and a commitment to user privacy. By learning from the shortcomings of ChatGPT, the AI development community can work towards a future where AI serves humanity without compromising individual privacy.
