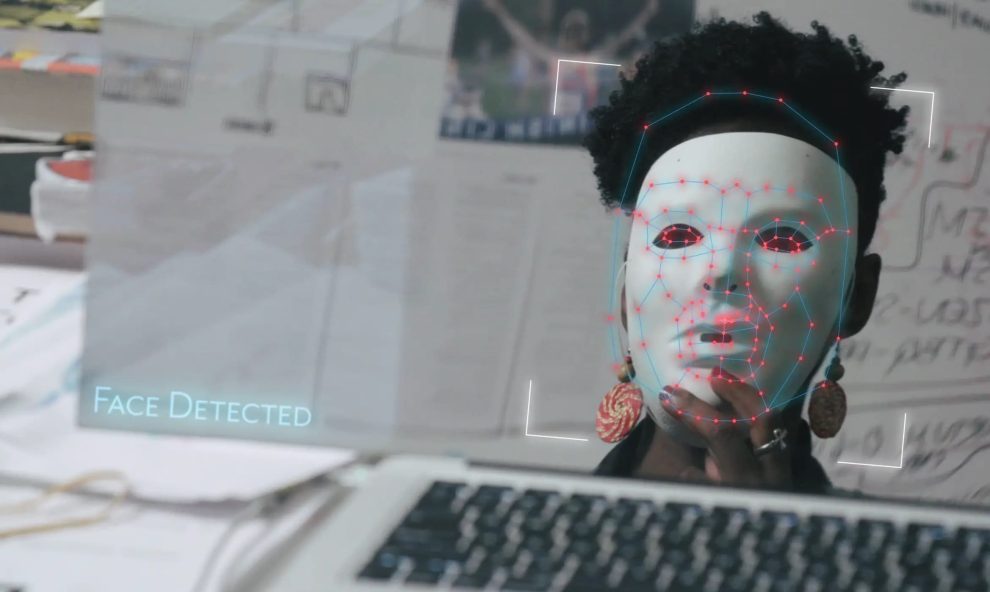

Facial analysis systems (FAS) are advancing rapidly and now power everything from unlocking smartphones to identifying criminal suspects. However, these systems often perform worse for minority groups and perpetuate real-world harms because of biases rooted in their training data and algorithms.

In this post, we’ll examine the inclusivity crisis in facial analysis: why bias occurs and how it affects society. More importantly, we’ll outline actionable ways to evaluate and mitigate bias, and the steps needed to build responsible AI that serves all groups fairly and equitably.

The Data Dilemma: Where Bias Stems From

Most facial analysis systems today are trained on datasets lacking diversity, overrepresenting particular demographics while excluding others. Even seemingly diverse datasets contain subtle sampling biases skewed towards specific lighting conditions or facial features. On top of this, human annotators unconsciously inject their own biases into data labeling, leading systems to make decisions based on race, skin tone or other attributes rather than objective facial traits.

It’s no surprise, then, that FAS stumble when presented with underrepresented faces. Just as a language model trained solely on Shakespeare would falter at modern slang, FAS that are adept at recognizing young Caucasian males struggle to accurately analyze women, older adults and minority groups. The seeds of bias are often planted early in the machine learning pipeline.

Key Sources of Bias in FAS Data

- Lack of diversity in datasets like LFW and CelebA

- Unrepresentative sampling within datasets

- Implicit human biases during data annotation

The Real-World Impact: When Bias Bites

The fallout from biased facial analysis systems extends far beyond technical inaccuracies. When deployed in law enforcement, HR, banking and other sensitive applications, they risk amplifying discrimination and causing lasting damage.

Potential Consequences Include:

- Increased profiling and surveillance: Systems may disproportionately target specific minority groups, leading to unfair discrimination.

- Privacy violations: Systems that struggle to differentiate diverse faces can increase misidentification and unauthorized tracking.

- Psychological harm: Constant exclusion or false accusations can negatively impact minority users’ self-esteem and mental health.

There are also financial motivations for improving inclusivity. With growing calls for ethical AI and expanding minority markets, businesses implementing biased technologies risk damaging their brand reputation, losing consumer trust or facing legal repercussions.

Evaluating Inclusivity in Facial Analysis Datasets

Spotting and resolving bias requires scrutinizing FAS development and deployment through an inclusivity lens. Here are some best practices for analysis:

1. Audit Datasets

Thoroughly probe datasets for demographic skews, sampling inconsistencies and annotation tendencies that could introduce bias, using tools like:

- Fairness metrics to quantify demographic representation

- Data profiling to uncover labeling patterns

- Manual reviews to sample misclassifications
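
As a rough illustration of the first audit step, the sketch below counts how often each demographic label appears and flags groups whose share falls under a chosen floor. The function name `representation_report`, the `min_share` cutoff and the toy labels are all assumptions made for this example; a real audit would use the dataset's own annotation scheme and a floor chosen with domain experts.

```python
from collections import Counter

def representation_report(labels, min_share=0.10):
    """Return each group's count and share, flagging groups below min_share.

    `labels` is a list with one demographic label per image (hypothetical schema).
    """
    counts = Counter(labels)
    total = sum(counts.values())
    report = {}
    for group, n in counts.items():
        share = n / total
        report[group] = {
            "count": n,
            "share": share,
            "underrepresented": share < min_share,
        }
    return report

# Toy annotations for 20 images (made-up data, not from any real dataset).
labels = ["lighter"] * 17 + ["darker"] * 3
report = representation_report(labels, min_share=0.25)
for group, stats in report.items():
    print(group, stats)
```

A count like this only measures representation along the attributes that were annotated, so it complements rather than replaces the manual review step.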

2. Seek Outside Perspectives

Consult domain experts, sociologists and community advocates to uncover blind spots in data and product design. Incorporate feedback through participatory design.

3. Test Broadly

Evaluate model performance across gender, skin tone, age and other attributes with stratified testing. Look for gaps between groups in accuracy, precision, and false positive and false negative rates.
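
One way to sketch stratified testing is to split a binary classifier's predictions by group and compute the same metrics for each slice. The helper `group_metrics` and the toy arrays below are hypothetical and assume labels encoded as 0 and 1; they are meant to show the shape of the comparison, not a complete evaluation harness.

```python
from collections import defaultdict

def group_metrics(y_true, y_pred, groups):
    """Per-group accuracy, false positive rate (FPR) and false negative rate (FNR)."""
    tallies = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        if truth == 1:
            key = "tp" if pred == 1 else "fn"
        else:
            key = "fp" if pred == 1 else "tn"
        tallies[group][key] += 1

    results = {}
    for group, c in tallies.items():
        positives = c["tp"] + c["fn"]
        negatives = c["fp"] + c["tn"]
        results[group] = {
            "n": positives + negatives,
            "accuracy": (c["tp"] + c["tn"]) / (positives + negatives),
            # Rates are undefined when a group has no negatives/positives.
            "fpr": c["fp"] / negatives if negatives else None,
            "fnr": c["fn"] / positives if positives else None,
        }
    return results

# Toy data: the model is perfect on group A and error-prone on group B.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 0, 0, 1, 0, 1, 0]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]

results = group_metrics(y_true, y_pred, groups)
for group, stats in sorted(results.items()):
    print(group, stats)
```

On this toy data the aggregate accuracy is 75%, which hides the fact that every error falls on group B. That is the kind of differential the per-group breakdown is meant to surface.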

Strategies to Improve Inclusivity

Once gaps are identified, what next? Here are some promising techniques to debias datasets and models:

1. Augment Underrepresented Groups

Actively oversample minority demographics and use generative models to synthesize additional examples, balancing overall distributions.
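
The oversampling half of this idea can be sketched with random duplication: every group is topped up with resampled copies until it matches the largest group. The function `oversample_to_balance` and the `(image_id, group)` tuples are assumptions for illustration, and the sketch does not cover synthesis with generative models. In practice the duplicates would usually be paired with image augmentation so the model does not simply memorize repeated examples.

```python
import random
from collections import Counter, defaultdict

def oversample_to_balance(samples, group_of, seed=0):
    """Duplicate randomly chosen samples until every group matches the largest one."""
    rng = random.Random(seed)  # fixed seed so the sketch is reproducible
    by_group = defaultdict(list)
    for sample in samples:
        by_group[group_of(sample)].append(sample)

    target = max(len(items) for items in by_group.values())
    balanced = []
    for items in by_group.values():
        balanced.extend(items)
        # Sample with replacement to fill the gap (k may be 0 for the largest group).
        balanced.extend(rng.choices(items, k=target - len(items)))
    return balanced

# Toy dataset of (image_id, group) pairs; identifiers are made up.
samples = [(f"img_{i}", "majority") for i in range(8)]
samples += [(f"img_{i}", "minority") for i in range(8, 10)]

balanced = oversample_to_balance(samples, group_of=lambda s: s[1])
balanced_counts = Counter(group for _, group in balanced)
print(balanced_counts)
```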

2. Adjust Thresholds

Tune confidence thresholds individually for subgroups to account for variability in precision rates.
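
A minimal sketch of per-group threshold tuning follows. It picks, for each subgroup, the lowest score threshold that keeps the false positive rate on validation data at or below a target. The false positive rate target is an assumption of this example (precision or another metric could be used instead), and `pick_threshold`, `max_fpr` and the validation scores are hypothetical.

```python
def pick_threshold(scores, labels, max_fpr):
    """Lowest threshold whose false positive rate on this data is <= max_fpr.

    A sample is accepted when its score is >= the threshold.
    """
    negatives = [s for s, y in zip(scores, labels) if y == 0]
    for threshold in sorted(set(scores)):
        false_positives = sum(1 for s in negatives if s >= threshold)
        fpr = false_positives / len(negatives) if negatives else 0.0
        if fpr <= max_fpr:
            return threshold
    return float("inf")  # no candidate meets the target, so nothing is accepted

# Toy validation scores per subgroup (made-up numbers).
validation = {
    "group_a": {"scores": [0.9, 0.8, 0.3, 0.2], "labels": [1, 1, 0, 0]},
    "group_b": {"scores": [0.9, 0.6, 0.7, 0.1], "labels": [1, 1, 0, 0]},
}

thresholds = {
    group: pick_threshold(data["scores"], data["labels"], max_fpr=0.1)
    for group, data in validation.items()
}
print(thresholds)

def accept(score, group):
    """Apply the threshold tuned for the sample's subgroup."""
    return score >= thresholds[group]
```

Here the model's scores for group B's negatives run higher than for group A's, so a single shared threshold would give the two groups different false positive rates. Tuning per group trades that disparity for a lower acceptance rate in group B, which is a choice worth reviewing with the people affected.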

3. Employ Techniques like Federated Learning

Decentralize model training to incorporate diverse facial data while preserving user privacy.
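
To make the idea concrete, here is a sketch of only the server-side aggregation step used in federated averaging: each client trains locally and sends back model weights, and the server combines them weighted by how many samples each client holds. Local training, communication and privacy mechanisms such as secure aggregation are omitted, and the names and numbers are illustrative assumptions.

```python
def federated_average(client_weights, client_sizes):
    """Average client weight vectors, weighted by each client's sample count."""
    total = sum(client_sizes)
    dim = len(client_weights[0])
    return [
        sum(weights[i] * size for weights, size in zip(client_weights, client_sizes)) / total
        for i in range(dim)
    ]

# Two hypothetical clients: the second holds three times as much data.
client_weights = [[1.0, 2.0], [3.0, 4.0]]
client_sizes = [1, 3]

global_weights = federated_average(client_weights, client_sizes)
print(global_weights)
```

Because the average is weighted by sample count, clients with little data still have proportionally little influence. Federated training widens the pool of faces the model can learn from, but it does not by itself guarantee balanced representation.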

4. Design Fairness Criteria

Incorporate quantitative benchmarks for subgroup accuracy into model development and evaluate against them.
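
Fairness criteria of this kind can be written down as an automated check that runs alongside other model tests. The sketch below assumes two illustrative criteria, a minimum accuracy for every subgroup and a maximum gap between the best and worst subgroup. The function name and both cutoff values are placeholders, not recommended standards.

```python
def check_fairness_criteria(group_accuracy, min_accuracy=0.90, max_gap=0.05):
    """Check per-group accuracies against a floor and a best-vs-worst gap limit."""
    worst_group = min(group_accuracy, key=group_accuracy.get)
    best_group = max(group_accuracy, key=group_accuracy.get)
    gap = group_accuracy[best_group] - group_accuracy[worst_group]

    failures = []
    if group_accuracy[worst_group] < min_accuracy:
        failures.append(f"{worst_group} accuracy below {min_accuracy}")
    if gap > max_gap:
        failures.append(f"gap between {best_group} and {worst_group} exceeds {max_gap}")

    return {
        "passed": not failures,
        "worst_group": worst_group,
        "gap": gap,
        "failures": failures,
    }

# Made-up subgroup accuracies for two candidate models.
failing = check_fairness_criteria({"A": 0.97, "B": 0.88})
passing = check_fairness_criteria({"A": 0.95, "B": 0.93})
print(failing)
print(passing)
```

Wiring a check like this into the release process means a model that regresses for one subgroup is blocked in the same way as one that regresses overall.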

Beyond Technical Solutions: Cultivating Responsible AI

While the techniques above help, debiasing facial analysis encompasses broader initiatives to ensure technology works equitably for all communities. Here are some tenets of responsible AI development:

1. Center Impacted Voices

Actively seek input from minority groups through participatory analysis, audits and feedback channels. Incorporate their concerns into requirements.

2. Radical Transparency

Openly publish model performance analyses segmented by gender, race and age to maintain accountability.

3. Develop Ethical Safeguards

Thoroughly assess use cases to minimize potential harms from misuse. For instance, advise against using certain facial analysis features in sensitive applications.

4. Champion from Leadership

Make diversity, equity and inclusion core pillars woven through organizational culture. Set representation goals and incentivize teams to meet them.

The Road Ahead: AI to Empower All

With conscious, sustained effort across the AI pipeline, facial analysis can uplift rather than oppress minorities. The strategies outlined here form a blueprint for change – now is the time to put them into practice.

We have a shared duty to create an inclusive future with AI. Join us on this mission.
