Have you ever asked a powerful language model for something, only to get a response that missed what you wanted? Much of what these AI systems can do for you depends on prompt engineering. In this guide, we’ll explore what prompt engineering is, why it’s important, and how you can use its techniques to guide large language models (LLMs) towards generating the desired output.

What is Prompt Engineering?

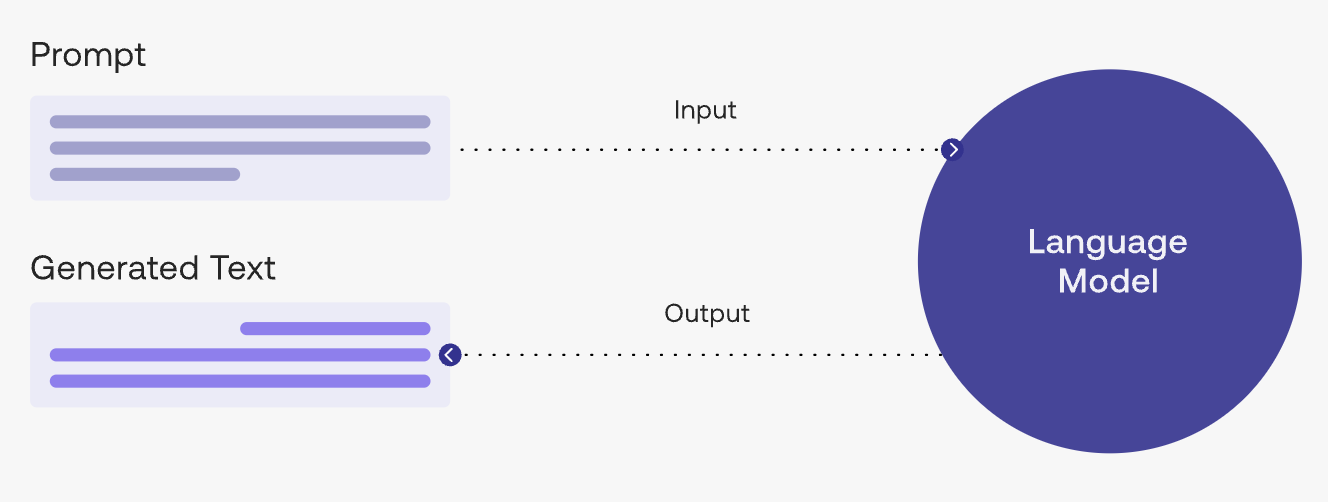

Prompt engineering is the practice of crafting clear, informative, and well-structured instructions that guide LLMs to produce the desired output. It involves understanding how these AI systems process and generate text based on the input they receive. By carefully designing prompts, you can direct LLMs to perform a wide range of tasks, such as creative writing, language translation, question answering, and more.

The Importance of Prompt Engineering

LLMs are trained on vast amounts of text data, allowing them to generate human-like responses. However, their ability to understand the nuances of human language and intent can sometimes fall short. This is where prompt engineering comes into play. By providing well-crafted prompts, you can bridge the gap between the LLM’s capabilities and your specific requirements, ensuring that the generated output aligns with your goals.

How Does Prompt Engineering Work?

To effectively engineer prompts, it’s essential to understand the core principles behind the process:

- Know Your LLM: Familiarize yourself with the strengths and limitations of the specific LLM you’re working with. Different models may have varying capabilities and require tailored approaches.

- Define Your Task: Clearly outline the desired outcome you want to achieve with the LLM. Whether it’s generating creative content, summarizing information, or answering questions, having a well-defined goal is crucial.

- Craft the Perfect Prompt: This is where the magic happens. When constructing your prompt, consider the following:

  - Use clear and concise language that the LLM can easily understand.

  - Provide relevant context or examples to guide the LLM towards the desired output.

  - Specify the desired format, style, and tone of the generated content.

- Iterate and Refine: Prompt engineering is an iterative process. Analyze the LLM’s output, identify areas for improvement, and refine your prompts accordingly. Continuous experimentation and refinement will help you achieve the best results.
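The prompt-crafting points above can be sketched in a few lines of Python. This is a minimal illustration, not a standard API: the function name `build_prompt` and its section labels (`Task`, `Context`, `Output format`, `Tone`) are assumptions made for this example, and the resulting string would be sent to whichever LLM you are working with.

```python
def build_prompt(task: str, context: str = "", output_format: str = "", tone: str = "") -> str:
    """Assemble a prompt from labeled sections, skipping any that are empty.

    Hypothetical helper for illustration; the section labels are arbitrary.
    """
    sections = [
        ("Task", task),
        ("Context", context),
        ("Output format", output_format),
        ("Tone", tone),
    ]
    # Keep only the sections that were filled in, each under its own label.
    return "\n\n".join(
        f"{label}:\n{text.strip()}" for label, text in sections if text.strip()
    )


prompt = build_prompt(
    task="Summarize the customer review below in two sentences.",
    context="Review: The headphones sound great, but the battery lasts only three hours.",
    output_format="Plain text, no bullet points.",
    tone="Neutral",
)
print(prompt)
```

Keeping the task, context, and format in separate labeled sections makes it easier to change one of them at a time when you iterate on the prompt.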

Advanced Prompt Engineering Techniques

As you delve deeper into prompt engineering, you can explore more advanced techniques to enhance the quality and efficiency of your prompts.

- Prompt Chaining: Break down complex tasks into a series of smaller, sequential prompts. This allows you to guide the LLM through a step-by-step process, ensuring more accurate and coherent outputs.

- Few-Shot Learning: Provide the LLM with a few high-quality examples that demonstrate the desired output, placed directly in the prompt. The model picks up the pattern from these examples in context, without any retraining, which helps it understand the task at hand and generate more relevant content.

- Fine-Tuning: For specific use cases, you can fine-tune an LLM on a dataset tailored to your domain or task. Unlike the other techniques in this list, fine-tuning changes the model itself rather than the prompt, and it can significantly improve the model’s performance in your domain and how it responds to your prompts.
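As a rough sketch of the two prompt-level techniques above, the Python below builds a few-shot prompt and runs a simple prompt chain. Everything here is an assumption made for illustration: `few_shot_prompt`, `run_chain`, and the `{previous}` placeholder are hypothetical names, and `echo_llm` is a stand-in that only mimics a model so the example runs without any real LLM service.

```python
from typing import Callable, List, Tuple

# Hypothetical type: any function that maps prompt text to response text.
LLM = Callable[[str], str]


def few_shot_prompt(instruction: str, examples: List[Tuple[str, str]], query: str) -> str:
    """Place worked input/output pairs ahead of the new input."""
    lines = [instruction, ""]
    for source, target in examples:
        lines += [f"Input: {source}", f"Output: {target}", ""]
    # End with the new input and an open "Output:" for the model to complete.
    lines += [f"Input: {query}", "Output:"]
    return "\n".join(lines)


def run_chain(llm: LLM, templates: List[str], initial_input: str) -> str:
    """Run prompts in sequence, feeding each response into the next template."""
    current = initial_input
    for template in templates:
        current = llm(template.format(previous=current))
    return current


def echo_llm(prompt: str) -> str:
    """Stand-in model: returns the last line of the prompt in upper case."""
    return prompt.splitlines()[-1].upper()


fs = few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    [("Loved it, works perfectly.", "positive"), ("Broke after one day.", "negative")],
    "Arrived late but does the job.",
)
print(fs)

result = run_chain(
    echo_llm,
    ["Extract the key facts:\n{previous}", "Write a one-line summary:\n{previous}"],
    "battery lasts three hours",
)
print(result)
```

In real use, `echo_llm` would be replaced by a function that calls your model. The chain keeps each step small, so a poor intermediate result can be spotted and its prompt fixed in isolation.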

Real-World Applications of Prompt Engineering

Prompt engineering finds applications across various domains, including:

- Content Creation: Generate compelling marketing copy, product descriptions, or social media posts that align with your brand’s voice and target audience.

- Language Translation: Craft prompts to improve the accuracy and fluency of machine-translated text, ensuring better communication across languages.

- Code Generation: Use prompts to automate repetitive coding tasks or generate code snippets based on specific requirements.

- Research and Education: Leverage LLMs to summarize complex research papers, generate educational content, or provide answers to student queries.

The Future of Prompt Engineering

As LLMs continue to evolve, the field of prompt engineering is likely to evolve with them. Possible developments include:

- Standardized Prompt Libraries: The development of shared libraries containing effective prompts for various tasks could streamline the prompt engineering process and enable easier collaboration among users.

- User-Friendly Interfaces: The emergence of intuitive interfaces that guide users through the prompt engineering workflow could make LLMs more accessible to a wider audience, regardless of technical expertise.

- Integration with AI Assistants: Prompt engineering techniques could be integrated into AI assistants, allowing users to provide natural language instructions for complex tasks and receive accurate, context-aware responses.

Addressing Common Concerns and Best Practices

While prompt engineering offers immense potential, it’s important to be aware of certain concerns and follow best practices:

- Mitigating Bias: LLMs can sometimes reflect societal biases present in their training data. When crafting prompts, be mindful of potential biases and strive to create neutral, inclusive content.

- Ensuring Accuracy: LLMs can produce fluent but misleading or false information, and prompts that are careless or leading make this more likely. Always fact-check the generated content before you rely on it or share it.

- Ethical Considerations: As with any powerful technology, prompt engineering raises ethical concerns. Prioritize transparency, explainability, and accountability when working with LLMs. Document your prompts and be responsible for the content generated based on them.

To further enhance your prompt engineering skills, consider the following best practices:

- Start Simple: Begin with clear, straightforward prompts and gradually increase complexity as you gain experience.

- Iterate and Refine: Continuously test and refine your prompts based on the generated outputs. Experimentation is key to achieving optimal results.

- Provide Feedback: Many LLM applications allow users to provide feedback on the generated content. Use this feature where it is available, and within a conversation, tell the model directly what to change so that its next response better reflects your preferences and requirements.

- Stay Updated: Keep yourself informed about the latest advancements, techniques, and best practices in prompt engineering. Engage with online communities, follow research papers, and attend relevant conferences or workshops.
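The "test and refine" practice above can be made more systematic by running several prompt variants through the same checks. The sketch below is one possible approach, not an established tool: `score_prompts` and the checks are hypothetical, and `stub_llm` is a stand-in that returns canned text so the example runs without a real model.

```python
from typing import Callable, Dict, List

LLM = Callable[[str], str]     # hypothetical: prompt text in, response text out
Check = Callable[[str], bool]  # a check inspects one output and passes or fails


def score_prompts(llm: LLM, prompts: Dict[str, str], checks: List[Check]) -> Dict[str, int]:
    """Return, for each named prompt, how many checks its output passes."""
    scores = {}
    for name, prompt in prompts.items():
        output = llm(prompt)
        scores[name] = sum(1 for check in checks if check(output))
    return scores


def stub_llm(prompt: str) -> str:
    """Stand-in model: answers briefly only when asked for one sentence."""
    if "one sentence" in prompt:
        return "The battery lasts three hours."
    return "Well, there are many things to say. The battery is short-lived. Sound is good."


checks = [
    lambda out: out.count(".") == 1,       # exactly one sentence-ending period
    lambda out: "battery" in out.lower(),  # mentions the key fact
]
prompts = {
    "v1": "Summarize the review.",
    "v2": "Summarize the review in one sentence.",
}

scores = score_prompts(stub_llm, prompts, checks)
best = max(scores, key=scores.get)
print(scores, best)
```

Simple checks like these will not capture everything you care about, but they make it easier to compare prompt versions consistently instead of judging each output by eye.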

Conclusion

Prompt engineering is a powerful tool that enables you to harness the full potential of large language models. By understanding the core principles, applying advanced techniques, and following best practices, you can guide LLMs to generate accurate, relevant, and creative content that meets your specific needs.

As you embark on your prompt engineering journey, remember to approach it with curiosity, experimentation, and a commitment to responsible use. The art of crafting effective prompts is an ongoing process that requires continuous learning and refinement.

So, go ahead and start whispering to the machine. With the right prompts, you can unlock a world of possibilities and revolutionize the way you interact with language models. Happy prompt engineering!