The emergence of artificial intelligence (AI) tools in healthcare, particularly radiology and pathology, is viewed with both enthusiasm and apprehension. While AI promises improved efficiency and accuracy, fears of automation and job displacement loom large for human experts like radiologists and pathologists.

But the future need not be a zero-sum game between humans and machines. Instead of seeing AI as a competitor, we must shift our perspective towards collaboration and augmentation. This is where explainable AI (XAI) enters as a game-changer, enabling synergistic partnerships between AI and human specialists to realize healthcare’s full potential.

The Promise and Perils of AI in Radiology and Pathology

AI has demonstrated remarkable results across medical imaging and digital pathology applications, including:

- Breast cancer prediction from mammogram scans

- Lung disease diagnosis from chest X-rays

- Brain tumor detection from MRI scans

- Skin cancer classification from dermoscopic images

By automating time-consuming tasks like measurement, classification, and detection, AI allows radiologists and pathologists to focus more on complex analysis, consultations, and difficult cases.

However, barriers to widespread adoption still exist:

- Black box design: Most AI models act as black boxes, offering outputs without explanations behind them.

- Trust deficiencies: This opacity exacerbates trust issues, hindering acceptance amongst radiology and pathology experts.

- Risk of deskilling: If AI takes over the bulk of diagnostic work, some fear it may erode human skills over time.

This is where explainable AI offers the best of both worlds – augmented intelligence without losing human oversight and control.

How Explainable AI Builds Trust

Also known as interpretable AI, explainable AI aims to make a model's workings and reasoning transparent to users. Unlike impenetrable black boxes, XAI:

- Highlights key features that influenced model output

- Pinpoints regions of images vital for predictions

- Conveys model confidence for added context
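To make the three capabilities above concrete, here is a minimal sketch of occlusion sensitivity, one common way to find the image regions a prediction depends on. Everything in it is an assumption for illustration: `toy_model` is a hypothetical stand-in for a trained classifier, the 24x24 "scan" is synthetic, and the patch size is arbitrary. The idea is to blank out one patch at a time and record how much the model's confidence drops.

```python
import math
import random


def toy_model(image):
    """Hypothetical classifier: returns a probability in [0, 1].

    It responds only to mean brightness in rows 8-15, columns 8-15,
    standing in for a model that has learned to look at a lesion.
    """
    region = [image[r][c] for r in range(8, 16) for c in range(8, 16)]
    signal = sum(region) / len(region)
    return 1.0 / (1.0 + math.exp(-(10.0 * signal - 5.0)))


def occlusion_map(model, image, patch=8, baseline=0.0):
    """Return the model's confidence and a coarse importance grid.

    Each grid cell holds the drop in confidence when the matching
    patch of the image is replaced by the baseline value.
    """
    base_score = model(image)
    rows, cols = len(image), len(image[0])
    heat = [[0.0] * (cols // patch) for _ in range(rows // patch)]
    for i in range(0, rows - patch + 1, patch):
        for j in range(0, cols - patch + 1, patch):
            occluded = [row[:] for row in image]  # copy so the input is untouched
            for r in range(i, i + patch):
                for c in range(j, j + patch):
                    occluded[r][c] = baseline
            heat[i // patch][j // patch] = base_score - model(occluded)
    return base_score, heat


# Synthetic image: dim background noise plus a bright central "lesion".
random.seed(0)
image = [[random.uniform(0.0, 0.2) for _ in range(24)] for _ in range(24)]
for r in range(8, 16):
    for c in range(8, 16):
        image[r][c] += 0.8

confidence, heat = occlusion_map(toy_model, image)
cells = [(i, j) for i in range(len(heat)) for j in range(len(heat[0]))]
top_patch = max(cells, key=lambda ij: heat[ij[0]][ij[1]])

print(f"model confidence: {confidence:.3f}")
print(f"most influential patch (row, col): {top_patch}")
```

In a real viewer the grid would be upsampled and overlaid on the scan as a heatmap, with the confidence shown alongside it. Occlusion is only one option; gradient-based saliency methods serve the same purpose and may suit large models better.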

By bridging the gap between predictions and reasoning, explainable AI establishes:

- Transparency: Users understand model decision processes

- Trust: Confidence improves in model predictions

- Responsibility: Accountability for AI-assisted diagnosis is shared

Benefits of XAI For Radiology and Pathology

As AI becomes entrenched across imaging and digital pathology, explainable models can enhance specialist roles in the following ways:

1. Improved Diagnoses and Patient Outcomes

Explainable AI serves not to replace expert radiologists or pathologists but to complement their skills. By working synergistically, human specialists supported by AI tools can achieve greater diagnostic precision. This further enables:

- Earlier disease detection leading to timely treatment

- Reduced diagnostic errors and associated malpractice risk

- Tailored and optimized treatment plans reflecting subtleties possibly missed in standard AI models

2. Increased Workflow Efficiency

While improved accuracy is invaluable, efficiency matters too, given chronic radiologist shortages, high caseloads, and burnout issues. Explainable AI can automate time-intensive tasks, freeing specialists to focus on more critical work like:

- Complex case consultations with colleagues

- Multidisciplinary collaboration for challenging diagnoses

- Direct communication and counseling of patients

It also supports prioritization of workflows, allowing radiologists and pathologists to triage cases efficiently.
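As a rough illustration of such prioritization, the sketch below orders a worklist by a model's estimated probability of abnormality and flags cases where the model is uncertain. The `Case` class, the `abnormal_prob` field, and the 0.4 to 0.6 uncertainty band are all hypothetical choices, not taken from any specific product or guideline.

```python
from dataclasses import dataclass


@dataclass
class Case:
    case_id: str
    abnormal_prob: float  # hypothetical model output in [0, 1]


def triage(cases, uncertain_band=(0.4, 0.6)):
    """Sort cases from most to least suspicious.

    Returns (case_id, probability, uncertain) tuples, where `uncertain`
    marks cases whose probability falls inside the band and therefore
    deserve closer human attention.
    """
    low, high = uncertain_band
    ordered = sorted(cases, key=lambda c: c.abnormal_prob, reverse=True)
    return [(c.case_id, c.abnormal_prob, low <= c.abnormal_prob <= high)
            for c in ordered]


worklist = [Case("A", 0.12), Case("B", 0.91), Case("C", 0.55)]
result = triage(worklist)
for case_id, prob, uncertain in result:
    note = " (model uncertain, review closely)" if uncertain else ""
    print(f"{case_id}: {prob:.2f}{note}")
```

Every case still reaches a specialist; the ordering only changes what is read first. Keeping the uncertainty flag separate from the sort key is a deliberate choice here, so that a borderline case is never silently pushed to the bottom of the list.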

3. Enhanced Understanding of Disease

By conveying subtle insights not discernible to the naked eye, explainable AI serves as an invaluable educational aid that helps radiologists and pathologists refine their skills. Over time, XAI-assisted diagnosis enables specialists to become attuned to minute pathological indicators through consistent exposure, analysis, and learning.

Radiologists and pathologists also play a crucial role in continuously honing AI tools by:

- Identifying blind spots

- Seeking explanatory justification

- Providing contextual refinement

This two-way feedback loop leads to superior AI design while allowing human experts to evolve professionally as well.

Mitigating Automation’s Disruptive Impact

Automation angst and fears of professional obsolescence are understandable in an AI-transformed landscape. But these fears may be overstated, provided specialists take proactive measures to adapt professionally rather than resist progress. Constructive ways to mitigate disruption include:

Upskilling in New Areas

As AI handles a growing share of repetitive analytic tasks, radiologists’ and pathologists’ roles will become more consultative. Enhancing skills in communication, stakeholder interactions, data science, critical thinking, and change management will be key to continued relevance and growth.

Specializing in Novel Subdomains

Niche specializations like interventional radiology, genomic pathology, and microvascular imaging represent complex areas where AI struggles. Pursuing such subspecialties allows radiologists and pathologists to cement indispensable expertise.

Transitioning to Hybrid Roles

Blending clinical and technology capabilities by becoming “radiologist data scientists” or “computational pathologists” offers new career avenues. Such hybrids can guide AI development to align with clinical needs and constraints.

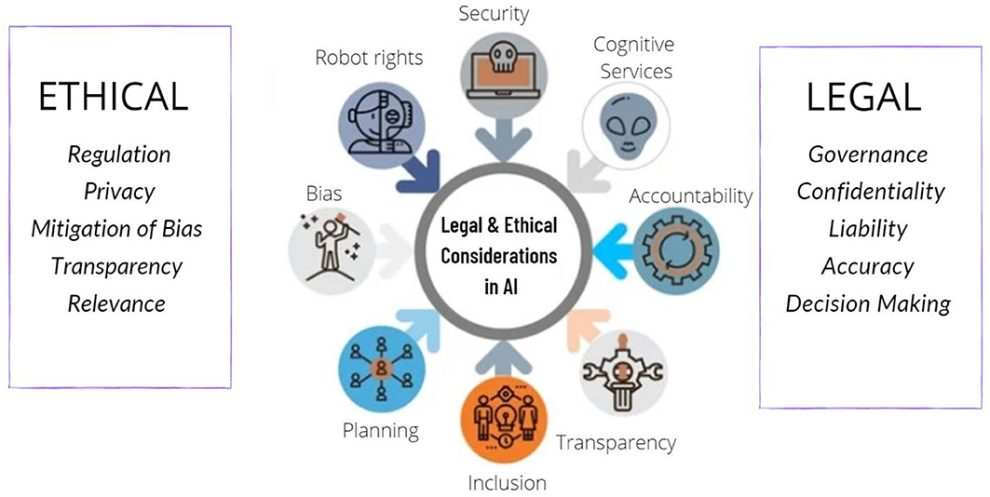

Advocating Responsible AI Adoption

Radiologists and pathologists should have a seat at the table in healthcare AI conversations. Getting involved with institutional AI ethics boards and policy groups allows specialists to shape AI deployment, ensuring it augments without detrimental disruption.

Education Holds the Key

Ultimately, smoothing the transition towards an AI-empowered future for radiology and pathology hinges on continuous learning. Some recommendations include:

- AI Literacy: Developing expertise in AI fundamentals, applications, and implications.

- XAI Upskilling: Understanding algorithm interpretability tools for improved human-AI collaboration.

- Data Analytics: Enhancing data analysis skills to identify promising AI opportunities and pitfalls.

Academic institutions and professional medical societies must make such learning accessible through integrated curricula, online modules, and applied education. Hands-on hackathons, datathons, and interactive workshops can also catalyze skill development.

But more importantly, human specialists like radiologists and pathologists should proactively seek learning for career resilience. The onus lies on individuals to view AI as an opportunity for elevation rather than a threat.

The Outlook for Human-AI Collaboration

AI disruption may feel imminent and inevitable to some radiologists and pathologists. But obsolescence is unlikely in the foreseeable future. The volume of imaging and histopathology data will only expand, along with demand for accurate diagnosis and communication.

Rather than dreading AI, specialists should welcome it as an amplifying partner in addressing healthcare’s complexities. Explainable models in particular allow radiologists and pathologists to practice at the top of their competencies while AI manages standardized tasks.

By openly embracing this human-machine collaboration, radiology and pathology can continue fulfilling their life-saving roles enhanced by AI’s untiring assistance.
