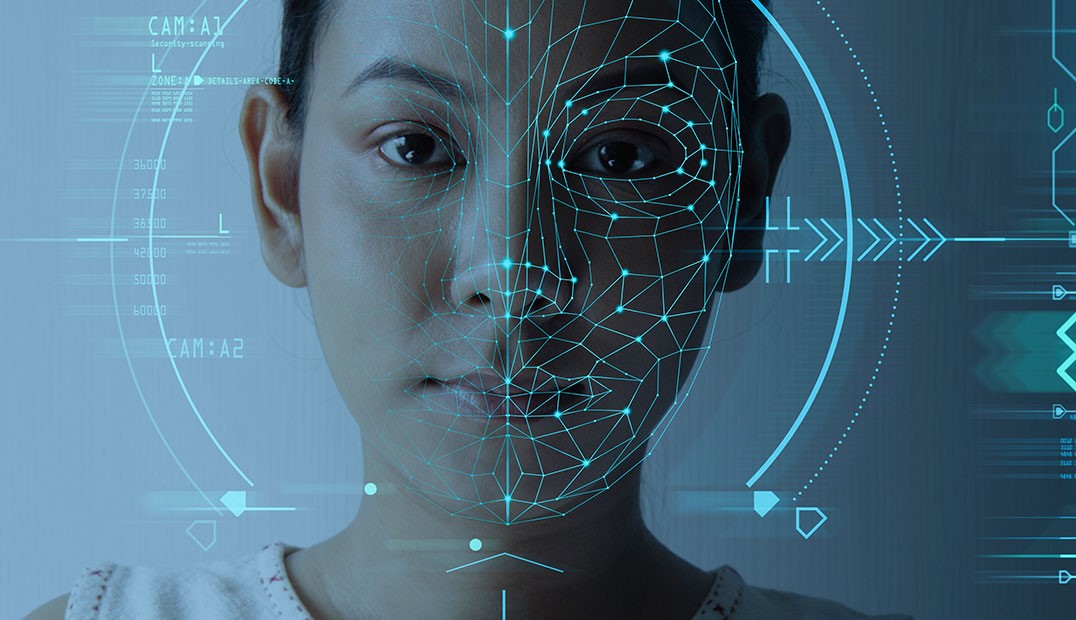

Facial recognition AI is now part of everyday life, from unlocking smartphones to passing through airports, and it brings real convenience. That reach comes with responsibility: if these systems are not designed inclusively, they carry the risk of demographic exclusion and bias.

Studies have shown that facial recognition systems can be less accurate for certain groups, such as people of color and women. Without inclusive design, this can lead to discriminatory outcomes and deepen existing societal inequalities.

In this article, we’ll explore the root causes of bias in facial AI and provide best practices for building inclusive systems to benefit all groups equally.

Understanding the Causes of Bias in Facial Recognition AI

Before examining solutions, it’s important to unpack the various factors that can introduce bias into facial AI:

Data Bias

If the training data itself is biased and lacks diversity, those biases propagate through the model. For example, an AI predominantly trained on light-skinned male faces will likely struggle with darker skin tones.

Algorithm Bias

Even with diverse data, the algorithms powering facial AI can contain inherent biases. These can stem from choices made during development or unintended consequences in complex neural networks.

Societal Bias

Societal biases also creep into facial AI systems, influencing choices such as data selection, performance metrics, and the descriptive language used.

Best Practices for Building Inclusive Facial Recognition AI

Mitigating bias requires a concerted effort across the entire development pipeline. Here are five best practices for building inclusive facial recognition systems:

Diverse Training Data

Bias mitigation starts with diverse and representative training data that reflects the genders, ethnicities, ages, and other demographic groups the system will encounter.
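As a rough illustration of what checking for representation could look like, the sketch below computes each group's share of a dataset and flags groups that fall under a chosen threshold. The function name `representation_report`, the 10% threshold, and the group labels are all hypothetical; real projects would choose groups and thresholds to match the population the system will actually serve.

```python
from collections import Counter


def representation_report(group_labels, min_share=0.10, expected_groups=None):
    """Return each group's share of the dataset and the groups below min_share.

    group_labels: one demographic label per training image (hypothetical metadata).
    expected_groups: optional list of groups that should appear, so that groups
    missing from the data entirely are still reported with a share of 0.0.
    """
    if not group_labels:
        raise ValueError("group_labels must not be empty")

    counts = Counter(group_labels)
    total = len(group_labels)
    shares = {group: count / total for group, count in counts.items()}

    # Groups we expected but never saw get an explicit zero share.
    for group in expected_groups or []:
        shares.setdefault(group, 0.0)

    underrepresented = sorted(g for g, s in shares.items() if s < min_share)
    return shares, underrepresented


# Hypothetical dataset: 80 / 15 / 5 images across three groups, none for a fourth.
labels = ["group_a"] * 80 + ["group_b"] * 15 + ["group_c"] * 5
shares, flagged = representation_report(
    labels,
    min_share=0.10,
    expected_groups=["group_a", "group_b", "group_c", "group_d"],
)
print(shares)
print(flagged)  # ['group_c', 'group_d']
```

A report like this only shows how many samples each group has. It says nothing about image quality, lighting, or pose variety within a group, which also matter for representativeness.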

Employ Debiasing Techniques

Data augmentation and algorithmic adjustments both help debias systems. Examples include synthetically generating samples for underrepresented groups and explicitly optimizing for fairness across groups.
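One simple adjustment in this spirit is reweighting: giving samples from smaller groups a larger weight so that every group contributes equally to the training loss. This is a simpler relative of the techniques named above, not a substitute for them. The sketch below assumes one group label per sample. The function name `balanced_sample_weights` is hypothetical, and the formula is the common inverse-frequency heuristic, weight = N / (number of groups × group count).

```python
from collections import Counter


def balanced_sample_weights(group_labels):
    """Return one weight per sample so that each group's weights sum to the same total.

    Uses the inverse-frequency heuristic: n_samples / (n_groups * group_count).
    """
    if not group_labels:
        raise ValueError("group_labels must not be empty")

    counts = Counter(group_labels)
    n_samples = len(group_labels)
    n_groups = len(counts)
    return [n_samples / (n_groups * counts[group]) for group in group_labels]


# Hypothetical imbalance: 8 samples from one group, 2 from another.
labels = ["group_a"] * 8 + ["group_b"] * 2
weights = balanced_sample_weights(labels)

# Each group's total weight is now equal (5.0 each), and the overall sum stays at 10.
total_a = sum(w for w, g in zip(weights, labels) if g == "group_a")
total_b = sum(w for w, g in zip(weights, labels) if g == "group_b")
print(total_a, total_b)
```

Weights like these would typically be passed to a training loop as per-sample loss weights. Reweighting cannot invent variety that the data lacks, so it works best alongside collecting more diverse data.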

Increase Algorithmic Transparency

By inspecting model decisions through explainable AI techniques, developers can identify areas needing adjustment for fairness.

Ongoing Human Oversight

Humans must continually audit system decisions for bias, intervene when necessary, and establish accountability.

Continuous Monitoring and Improvement

Regularly measure performance across demographic groups, taking corrective actions to address any identified bias.
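A minimal sketch of such a measurement, assuming a labeled evaluation set of face-pair comparisons tagged with a demographic group: for each group it computes the false match rate (different people wrongly matched) and the false non-match rate (the same person wrongly rejected), then reports the largest gap between groups. The record layout and the function names `error_rates_by_group` and `max_gap` are hypothetical.

```python
from collections import defaultdict


def error_rates_by_group(records):
    """Compute false match and false non-match rates per group.

    records: iterable of (group, is_same_person, predicted_match) tuples.
    """
    tallies = defaultdict(
        lambda: {"false_match": 0, "impostor": 0, "false_non_match": 0, "genuine": 0}
    )
    for group, is_same_person, predicted_match in records:
        t = tallies[group]
        if is_same_person:
            t["genuine"] += 1
            if not predicted_match:
                t["false_non_match"] += 1
        else:
            t["impostor"] += 1
            if predicted_match:
                t["false_match"] += 1

    report = {}
    for group, t in tallies.items():
        report[group] = {
            # None marks a rate that cannot be computed for lack of pairs.
            "fmr": t["false_match"] / t["impostor"] if t["impostor"] else None,
            "fnmr": t["false_non_match"] / t["genuine"] if t["genuine"] else None,
        }
    return report


def max_gap(report, metric):
    """Largest difference in a metric between any two groups."""
    values = [r[metric] for r in report.values() if r[metric] is not None]
    return max(values) - min(values) if values else 0.0


# Hypothetical evaluation results for two groups.
records = (
    [("group_a", True, True)] * 3 + [("group_a", True, False)] * 1
    + [("group_a", False, False)] * 4
    + [("group_b", True, True)] * 2 + [("group_b", True, False)] * 2
    + [("group_b", False, False)] * 3 + [("group_b", False, True)] * 1
)
report = error_rates_by_group(records)
print(report)
print(max_gap(report, "fnmr"), max_gap(report, "fmr"))
```

Tracking the gap over time, rather than only the overall accuracy, makes it harder for a regression that affects one group to go unnoticed.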

Looking Beyond Technology to Address Societal Biases

While technology has a crucial role, addressing societal biases is also key to unlocking the power of inclusive facial AI:

Raising Public and Policymaker Awareness

Educating society about both the promise and pitfalls of facial AI can encourage responsible development and help build trust.

Involving Diverse Stakeholders

Seeking input from various demographic groups in system design ensures different perspectives are represented.

Establishing Ethical Guidelines

Ethical guardrails emphasizing fairness, accountability, and transparency guide responsible facial AI deployment.

Building an Inclusive Facial AI Future

By following modern best practices and emphasizing inclusive design, facial recognition technology can empower people from all backgrounds. But achieving this requires continued collaboration between technologists, policymakers, and diverse public stakeholders.

If guided by ethical principles and responsible development, facial AI can become a transformative technology improving life for all, instead of reinforcing historical patterns of exclusion and bias.
